Programming Exercise

Overview

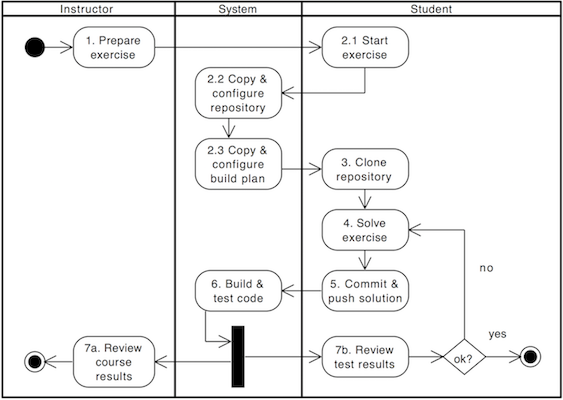

Conducting a programming exercise consists of the following main steps:

- 1: Instructor prepares exercise: Set up repositories containing the exercise code and test cases, configure build instructions, and set up the exercise in Artemis

- 2-5: Students work on exercise: Students clone repositories, solve exercises, and submit solutions

- 6: Automated testing: The continuous integration server verifies submissions by executing test cases and provides feedback

- 7: Instructor reviews results: Review overall results of all students and react to common errors and problems

The workflow diagram above shows the detailed steps from the student perspective. For a complete step-by-step guide on how students participate in programming exercises, see the Programming Exercise Student Guide.

Artemis supports a wide range of programming languages and is independent of specific version control and continuous integration systems. Instructors have significant freedom in defining the test environment.

Exercise Templates

Artemis supports templates for various programming languages to simplify exercise setup. The availability of features depends on the continuous integration system (Local CI or Jenkins) and the programming language. Instructors can still use those templates to generate programming exercises and then adapt and customize the settings in the repositories and build plans.

Supported Programming Languages

The following table provides an overview of supported programming languages and their corresponding templates:

| No. | Programming Language | Local CI | Jenkins | Build System/Notes | Docker Image |

|---|---|---|---|---|---|

| 1 | Java | ✅ | ✅ | Gradle, Maven, DejaGnu | artemis-maven-docker |

| 2 | Python | ✅ | ✅ | pip | artemis-python-docker |

| 3 | C | ✅ | ✅ | Makefile, FACT, GCC | artemis-c-docker |

| 4 | Haskell | ✅ | ✅ | Stack | artemis-haskell |

| 5 | Kotlin | ✅ | ✅ | Maven | artemis-maven-docker |

| 6 | VHDL | ✅ | ❌ | Makefile | artemis-vhdl-docker |

| 7 | Assembler | ✅ | ❌ | Makefile | artemis-assembler-docker |

| 8 | Swift | ✅ | ✅ | SwiftPM | artemis-swift-swiftlint-docker |

| 9 | OCaml | ✅ | ❌ | Dune | artemis-ocaml-docker |

| 10 | Rust | ✅ | ✅ | cargo | artemis-rust-docker |

| 11 | JavaScript | ✅ | ✅ | npm | artemis-javascript-docker |

| 12 | R | ✅ | ✅ | built-in | artemis-r-docker |

| 13 | C++ | ✅ | ✅ | CMake | artemis-cpp-docker |

| 14 | TypeScript | ✅ | ✅ | npm | artemis-javascript-docker |

| 15 | C# | ✅ | ✅ | dotnet | artemis-csharp-docker |

| 16 | Go | ✅ | ✅ | built-in | artemis-go-docker |

| 17 | Bash | ✅ | ✅ | built-in | artemis-bash-docker |

| 18 | MATLAB | ✅ | ❌ | built-in | matlab |

| 19 | Ruby | ✅ | ✅ | Rake | artemis-ruby-docker |

| 20 | Dart | ✅ | ✅ | built-in | artemis-dart-docker |

Feature Support by Language

Feature Explanations

The features listed in the Feature Support by Language Matrix below provide different capabilities for programming exercises.

Sequential Test Runs

Artemis can generate a build plan which first executes structural and then behavioral tests. This feature helps students better concentrate on the immediate challenge at hand by running tests in a specific order.

When enabled, structural tests (like checking class structure, method signatures) run first. Only if these pass will behavioral tests (testing actual functionality) execute. This prevents students from being overwhelmed by behavioral test failures when fundamental structural requirements aren't met yet.
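The ordering described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the build plan Artemis generates: the function name `run_sequential` and the representation of tests as callables that return True on success are assumptions made for this example.

```python
from typing import Callable, Dict

Test = Callable[[], bool]  # hypothetical: a test returns True when it passes


def run_sequential(structural: Dict[str, Test], behavioral: Dict[str, Test]) -> Dict[str, bool]:
    """Run structural tests first; run behavioral tests only if all of them pass."""
    results = {name: test() for name, test in structural.items()}
    if all(results.values()):
        # Structural requirements are met, so behavioral feedback is meaningful.
        results.update({name: test() for name, test in behavioral.items()})
    return results


# Usage: the behavioral test is skipped because a structural test fails.
report = run_sequential(
    structural={"class_exists": lambda: True, "method_signature": lambda: False},
    behavioral={"sorts_correctly": lambda: True},
)
print(report)
```

In this sketch the student only sees the two structural results until the method signature is fixed.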

Package Name

For some programming languages (primarily Java, Kotlin, Swift, and Go), you can specify a package name that will be used in the exercise template. This defines the package structure students must use in their code.

The package name is configured during exercise creation and helps maintain consistent code organization across student submissions.

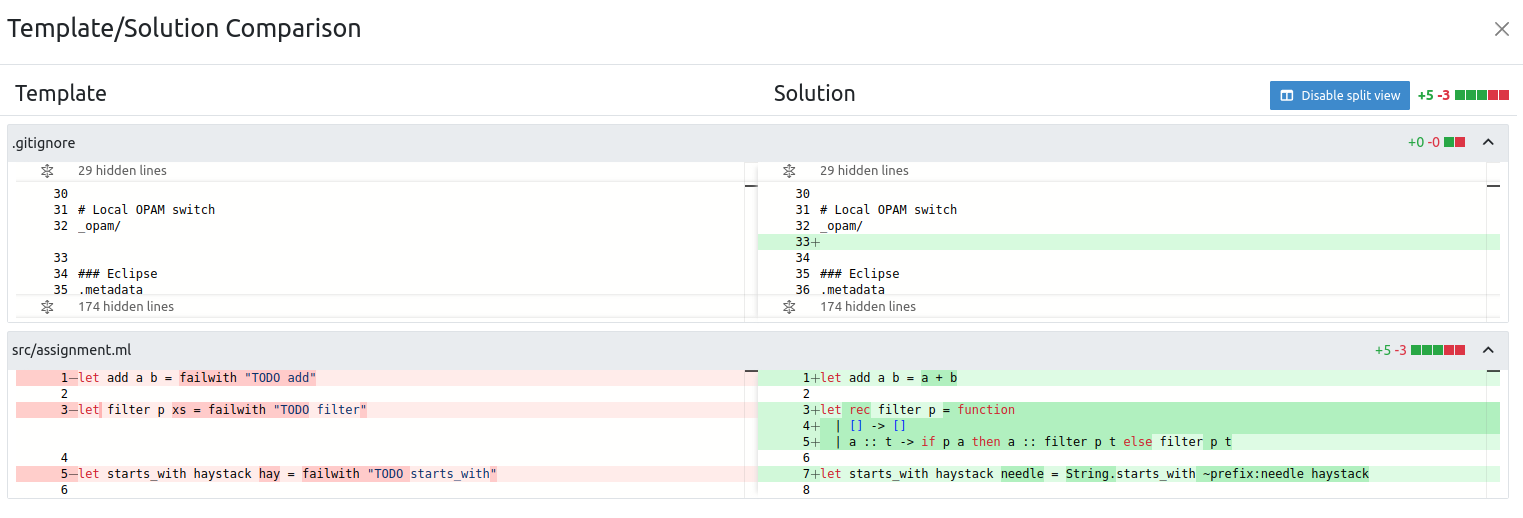

Solution Repository Checkout

This feature allows instructors to compare a student submission against the sample solution in the solution repository. When available, instructors can view differences between student code and the reference solution side-by-side, making it easier to identify where students deviate from the expected approach.

Note: This feature is currently only supported for OCaml and Haskell exercises.

Auxiliary Repositories

Auxiliary repositories are additional repositories beyond the standard template, solution, and test repositories. They can be used to:

- Provide additional resources needed during testing

- Include libraries or dependencies

- Overwrite template source code in testing scenarios

Each auxiliary repository has a name, checkout directory, and description. They are created during exercise setup and added to the build plan automatically. For more details, see the Auxiliary Repositories configuration section in Exercise Creation.

Feature Support by Language Matrix

Not all templates support the same feature set. The table below provides an overview of supported features for each language:

L = Local CI, J = Jenkins

| No. | Language | Sequential Test Runs | Static Code Analysis | Plagiarism Check | Package Name | Project Type | Solution Repository Checkout | Auxiliary Repositories |

|---|---|---|---|---|---|---|---|---|

| 1 | Java | ✅ | ✅ | ✅ | ✅ | Gradle, Maven, DejaGnu | ❌ | L: ✅ J: ❌ |

| 2 | Python | L: ✅ J: ❌ | L: ✅ J: ❌ | ✅ | ❌ | n/a | ❌ | L: ✅ J: ❌ |

| 3 | C | ❌ | L: ✅ J: ❌ | ✅ | ❌ | FACT, GCC | ❌ | L: ✅ J: ❌ |

| 4 | Haskell | L: ✅ J: ❌ | ❌ | ❌ | ❌ | n/a | L: ✅ J: ❌ | L: ✅ J: ❌ |

| 5 | Kotlin | ✅ | ❌ | ✅ | ✅ | n/a | ❌ | L: ✅ J: ❌ |

| 6 | VHDL | ❌ | ❌ | ❌ | ❌ | n/a | ❌ | L: ✅ J: ❌ |

| 7 | Assembler | ❌ | ❌ | ❌ | ❌ | n/a | ❌ | L: ✅ J: ❌ |

| 8 | Swift | ❌ | ✅ | ✅ | ✅ | Plain | ❌ | L: ✅ J: ❌ |

| 9 | OCaml | ❌ | ❌ | ❌ | ❌ | n/a | ✅ | L: ✅ J: ❌ |

| 10 | Rust | ❌ | L: ✅ J: ❌ | ✅ | ❌ | n/a | ❌ | L: ✅ J: ❌ |

| 11 | JavaScript | ❌ | L: ✅ J: ❌ | ✅ | ❌ | n/a | ❌ | L: ✅ J: ❌ |

| 12 | R | ❌ | L: ✅ J: ❌ | ✅ | ❌ | n/a | ❌ | L: ✅ J: ❌ |

| 13 | C++ | ❌ | L: ✅ J: ❌ | ✅ | ❌ | n/a | ❌ | L: ✅ J: ❌ |

| 14 | TypeScript | ❌ | L: ✅ J: ❌ | ✅ | ❌ | n/a | ❌ | L: ✅ J: ❌ |

| 15 | C# | ❌ | ❌ | ✅ | ❌ | n/a | ❌ | L: ✅ J: ❌ |

| 16 | Go | ❌ | ❌ | ✅ | ✅ | n/a | ❌ | L: ✅ J: ❌ |

| 17 | Bash | ❌ | ❌ | ❌ | ❌ | n/a | ❌ | L: ✅ J: ❌ |

| 18 | MATLAB | ❌ | ❌ | ❌ | ❌ | n/a | ❌ | L: ✅ J: ❌ |

| 19 | Ruby | ❌ | L: ✅ J: ❌ | ❌ | ❌ | n/a | ❌ | L: ✅ J: ❌ |

| 20 | Dart | ❌ | L: ✅ J: ❌ | ❌ | ❌ | n/a | ❌ | L: ✅ J: ❌ |

Note: Only some templates for Local CI support Sequential Test Runs. Static Code Analysis for some exercises is also only supported for Local CI. Instructors can still extend the generated programming exercises with additional features that are not available in a specific template.

We encourage instructors to contribute improvements to the existing templates or to provide new templates. Please contact Stephan Krusche and/or create Pull Requests in the GitHub repository.

Exercise Creation

Creating a programming exercise consists of the following steps:

- Generate programming exercise in Artemis

- Update exercise code in the template, solution, and test repositories

- Adapt the build script (optional)

- Configure static code analysis (optional)

- Adapt the interactive problem statement

- Configure grading settings

- Verify the exercise configuration

Generate Programming Exercise

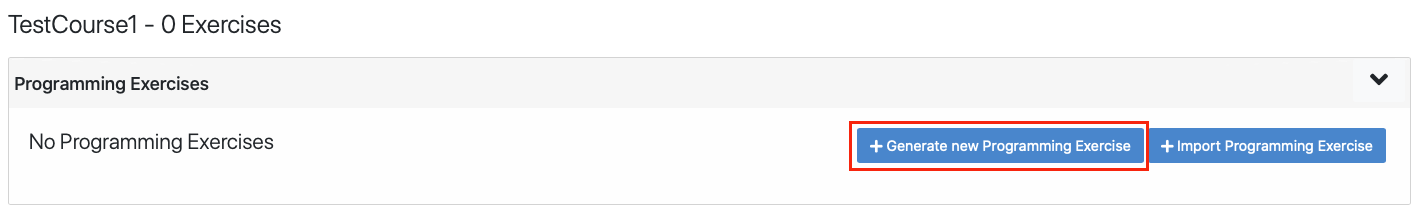

Navigate to Exercises of your preferred course.

Click on Generate new programming exercise.

The guided mode has been removed. Artemis now provides a validation bar to navigate through sections and help validate the form. Watch the screencast below for more information.

Artemis provides various options to customize programming exercises:

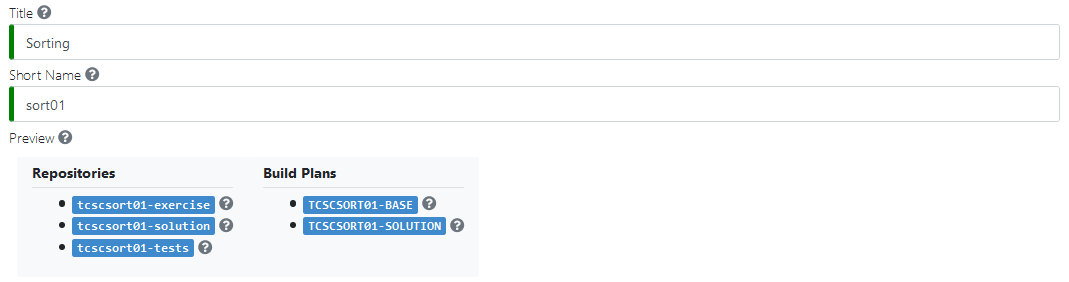

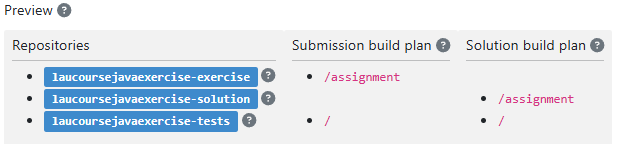

Naming Section

- Title: The title of the exercise. Used to create a project on the VCS server. Can be changed after creation

- Short Name: Together with the course short name, this creates a unique identifier for the exercise across Artemis (including repositories and build plans). Cannot be changed after creation

- Preview: Shows the generated repository and build plan names based on the short names
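As a rough illustration of how the two short names could combine into the names shown in the preview, consider the sketch below. The suffixes `exercise`, `tests`, and `solution` and the plan names `BASE` and `SOLUTION` appear elsewhere in this guide; the concatenation, the casing, and the hyphen separator are assumptions for this example, and the function `preview_names` is hypothetical.

```python
from typing import Dict, List


def preview_names(course_short_name: str, exercise_short_name: str) -> Dict[str, List[str]]:
    """Derive illustrative repository and build plan names from the two short names."""
    # Assumption: the identifier is the plain concatenation of both short names.
    key = f"{course_short_name}{exercise_short_name}"
    repositories = [f"{key.lower()}-{suffix}" for suffix in ("exercise", "tests", "solution")]
    build_plans = [f"{key.upper()}-{plan}" for plan in ("BASE", "SOLUTION")]
    return {"repositories": repositories, "build_plans": build_plans}


# Hypothetical course "pse25" with exercise "sorting"
print(preview_names("pse25", "sorting"))
```

Because the short name is part of every generated name, it makes sense that it cannot be changed after creation. Always check the preview in Artemis for the actual names.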

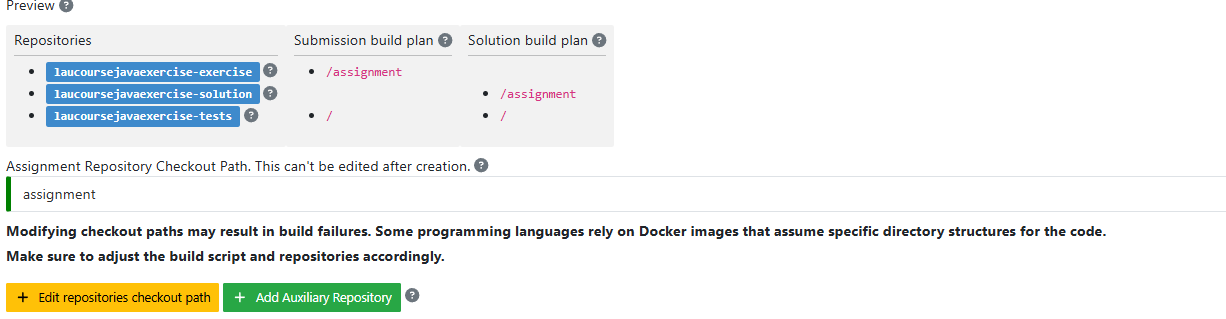

Auxiliary Repositories

- Auxiliary Repositories: Additional repositories with a name, checkout directory, and description. Created and added to the build plan when the exercise is created. Cannot be changed after exercise creation

Auxiliary repositories are checked out to the specified directory during automatic testing if a checkout directory is set. This can be used for providing additional resources or overwriting template source code in testing exercises.

Categories

- Categories: Define up to two categories per exercise. Categories are visible to students and should be used consistently to group similar exercises

Participation Mode and Options

- Difficulty: Information about the difficulty level for students

- Participation Mode: Whether students work individually or in teams. Cannot be changed after creation. Learn more about team exercises

- Team Size: For team mode, provide recommended team size. Instructors/Tutors define actual teams after exercise creation

- Allow Offline IDE: Allow students to clone their repository and work with their preferred IDE

- Allow Online Editor: Allow students to use the Artemis Online Code Editor

- Publish Build Plan: Allow students to access and edit their build plan. Useful for exercises where students configure parts of the build plan themselves

At least one of Allow Offline IDE or Allow Online Editor must be active.

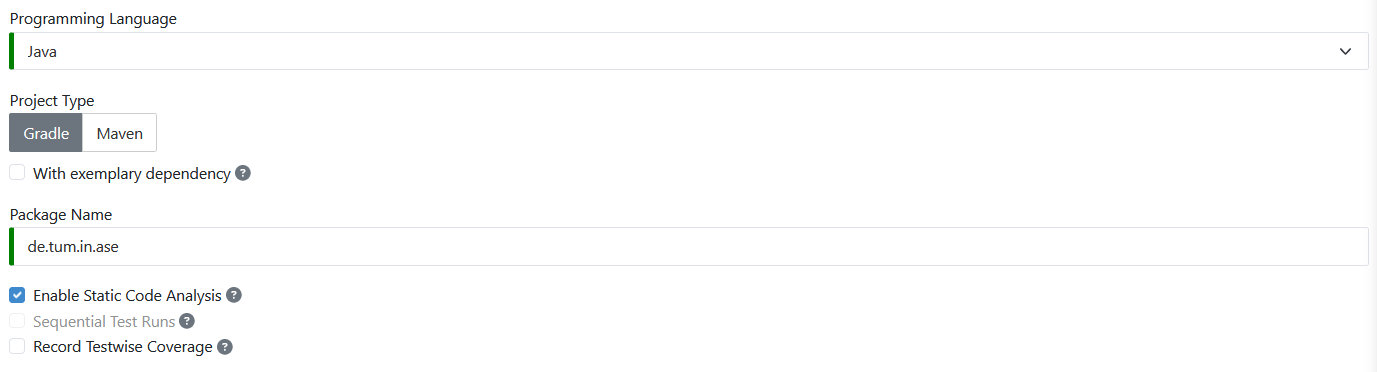

Programming Language and Project Settings

- Programming Language: The programming language for the exercise. Artemis chooses the template accordingly

- Project Type: Determines the project structure of the template. Not available for all languages

- With exemplary dependency: (Java only) Adds an external Apache commons-lang dependency as an example

- Package Name: The package name for this exercise. Not available for all languages

- Enable Static Code Analysis: Enable automated code quality checks. Cannot be changed after creation. See Static Code Analysis Configuration section below

- Sequential Test Runs: First run structural, then behavioral tests. Helps students focus on immediate challenges. Not compatible with static code analysis. Cannot be changed after creation

- Customize Build Plan: Customize the build plan of your exercise. Available for all languages with Local CI and Jenkins. Can also be customized after creation

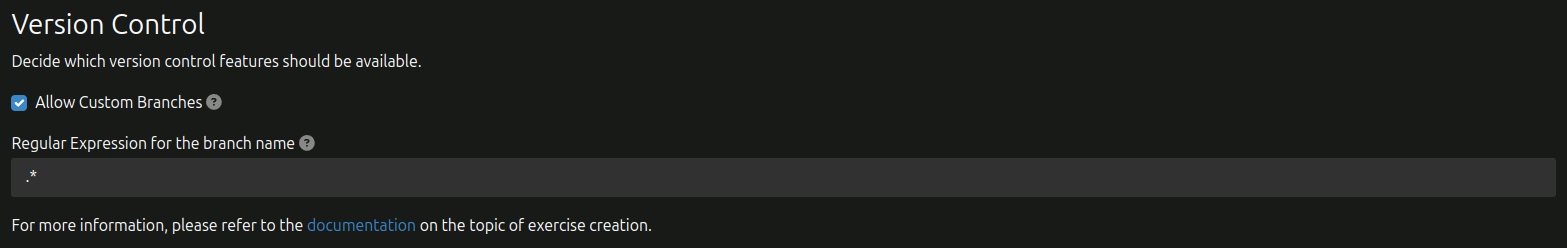

Version Control Settings

- Allow Custom Branches: Allow students to push to branches other than the default one

Artemis does not show custom branches in the UI and offers no merge support. Pushing to non-default branches does not trigger builds or create submissions. Students are fully responsible for managing their branches. Only activate if absolutely necessary!

- Regular Expression for branch name: Custom branch names are matched against this Java regular expression. Pushing is only allowed if it matches
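To get a feeling for which branch names a pattern accepts, it can help to try it out before saving the exercise. The sketch below uses Python's `re` module as a stand-in for Java regular expressions, which is adequate for simple patterns like the one shown. The pattern itself, the function `is_push_allowed`, and the choice to require a match of the full branch name are assumptions for this example.

```python
import re


def is_push_allowed(branch: str, default_branch: str, pattern: str) -> bool:
    """Allow the default branch, or any branch whose full name matches the pattern."""
    if branch == default_branch:
        return True
    # Assumption: the whole branch name has to match, not just a part of it.
    return re.fullmatch(pattern, branch) is not None


pattern = r"feature/[a-z0-9-]+"  # hypothetical pattern
print(is_push_allowed("feature/task-1", "main", pattern))
print(is_push_allowed("experiment", "main", pattern))
```

With this hypothetical pattern, `feature/task-1` is accepted and `experiment` is rejected.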

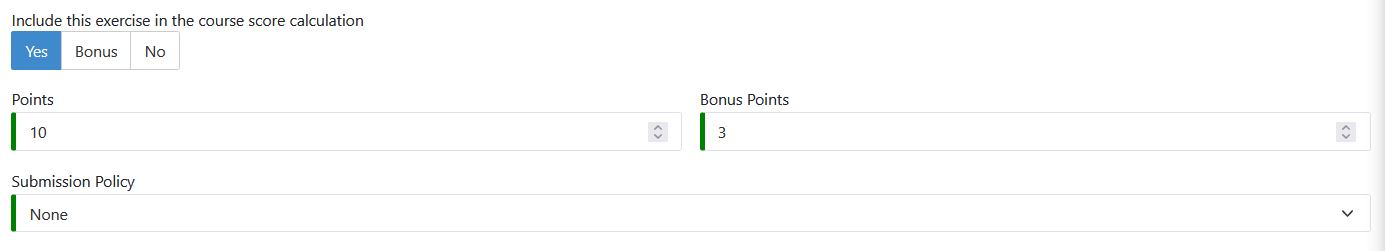

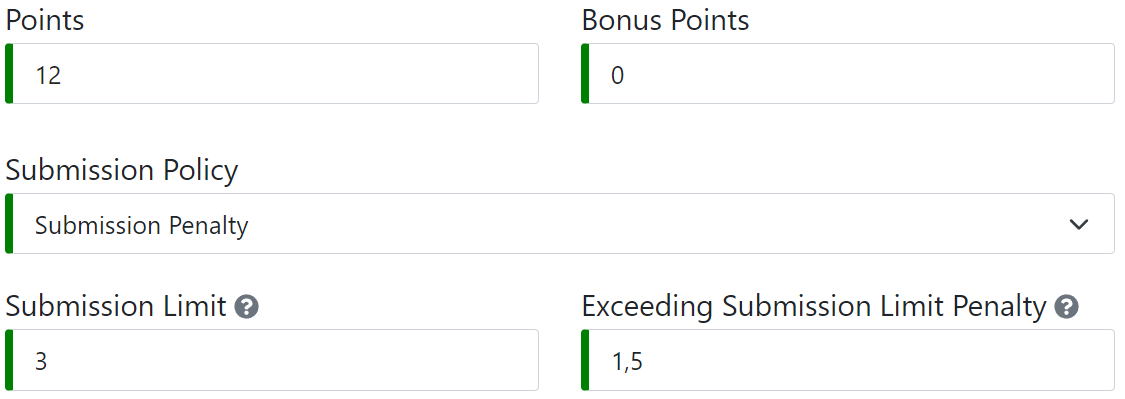

Score Configuration

- Should this exercise be included in the course/exam score calculation?

- Yes: Define maximum Points and Bonus points. Total points count toward course/exam score

- Bonus: Achieved Points count as bonus toward course/exam score

- No: Achieved Points do not count toward course/exam score

-

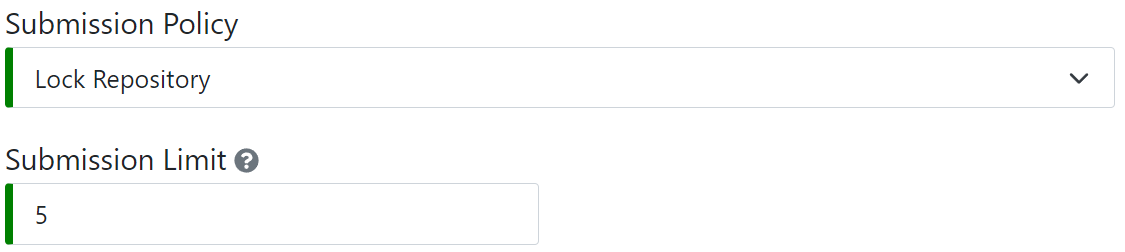

Submission Policy: Configure initial submission policy. Choose between:

- None: Unlimited submissions

- Lock Repository: Limit submissions; lock repository when limit is reached

- Submission Penalty: Unlimited submissions with point deductions after limit

See Submission Policy Configuration section for details.

Submission policies can only be edited on the Grading Page after initial exercise generation.

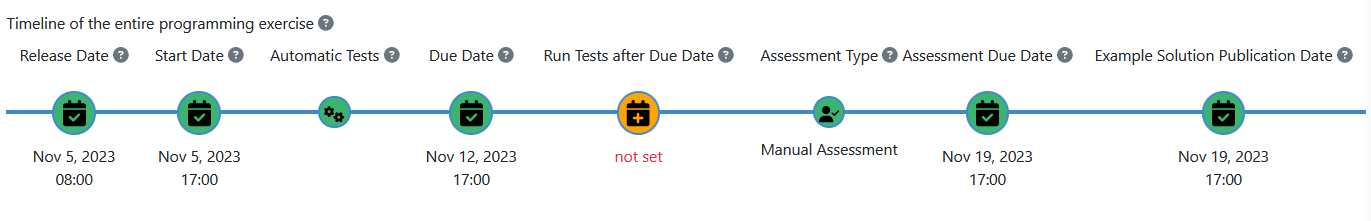

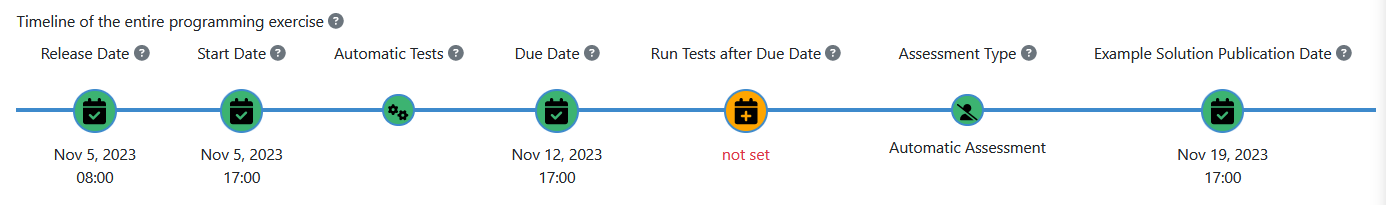

Timeline Configuration

- Release Date: When the exercise becomes visible to students

- Start Date: When students can start participating. If not set, students can participate immediately after release

- Automatic Tests: Every commit triggers test execution (except tests specified to run after due date)

- Due Date: Deadline for the exercise. Commits after this date are not graded

- Run Tests after Due Date: Build and test the latest in-time submission of each student on this date. Must be after due date. Use for executing hidden tests

- Assessment Type: Choose between Automatic Assessment or Manual Assessment. For manual assessment, tutors review submissions

- Assessment Due Date: Deadline for manual reviews. All assessments are released to students on this date

- Example Solution Publication Date: When the solution repository becomes available for students. If blank, never published
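The date rules stated in the list above can be expressed as small checks. This is a minimal sketch, assuming plain `datetime` values; the function names are hypothetical and Artemis performs its own validation in the exercise form.

```python
from datetime import datetime
from typing import Optional


def participation_start(release: datetime, start: Optional[datetime]) -> datetime:
    """Students can participate from the start date, or from the release date if none is set."""
    return start if start is not None else release


def is_graded(commit_time: datetime, due: datetime) -> bool:
    """Commits after the due date are not graded."""
    return commit_time <= due


def run_tests_date_is_valid(due: datetime, run_tests_after_due: Optional[datetime]) -> bool:
    """The date for running tests after the due date must lie after the due date."""
    return run_tests_after_due is None or run_tests_after_due > due


# Hypothetical dates
release = datetime(2025, 5, 1, 8, 0)
due = datetime(2025, 5, 15, 20, 0)
print(participation_start(release, None))
print(is_graded(datetime(2025, 5, 15, 20, 5), due))
print(run_tests_date_is_valid(due, datetime(2025, 5, 15, 21, 0)))
```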

Assessment Options

- Complaint on Automatic Assessment: Allow students to complain about automatic assessment after due date. Only available if complaints are enabled in the course or for exam exercises

Using practice mode, students can still commit code and receive feedback after the due date. These results are not rated.

- Manual feedback requests: Enable manual feedback requests, allowing students to request feedback before the deadline. Each student can make one request at a time

- Show Test Names to Students: Show names of automated test cases to students. If disabled, students cannot differentiate between automatic and manual feedback

- Include tests into example solution: Include test cases in the example solution so students can run tests locally

Static Code Analysis Configuration

- Max Static Code Analysis Penalty: Available if static code analysis is active. Maximum points that can be deducted for code quality issues as a percentage (0-100%) of Points. Defaults to 100% if empty

Example: Given an exercise with 10 Points and Max Static Code Analysis Penalty of 20%, at most 2 points will be deducted for code quality issues.
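The cap from this example can be written as a short calculation. The sketch below is illustrative only; the function name `capped_sca_deduction` and the parameter `raw_deduction` (the sum of all code quality penalties before capping) are assumptions for this example.

```python
from typing import Optional


def capped_sca_deduction(points: float, raw_deduction: float,
                         max_penalty_percent: Optional[float] = None) -> float:
    """Limit the code quality deduction to a percentage of the exercise points."""
    # An empty setting defaults to 100% of the points.
    percent = 100.0 if max_penalty_percent is None else max_penalty_percent
    cap = points * percent / 100.0
    return min(raw_deduction, cap)


print(capped_sca_deduction(10, 3.5, 20))  # prints 2.0
print(capped_sca_deduction(10, 3.5))      # prints 3.5
```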

Problem Statement and Instructions

- Problem Statement: The exercise description shown to students. See Adapt Interactive Problem Statement section

- Grading Instructions: Available for Manual Assessment. Create instructions for tutors during manual assessment

Click to create the exercise.

Result: Programming Exercise Created

Artemis creates the following repositories:

- Template: Template code that all students receive at the start. Can be empty

- Test: Contains all test cases (e.g., JUnit-based) and optionally static code analysis configuration. Hidden from students

- Solution: Solution code, typically hidden from students, can be made available after the exercise

Artemis creates two build plans:

- Template (BASE): Basic configuration for template + test repository. Used to create student build plans

- Solution (SOLUTION): Configuration for solution + test repository. Used to verify exercise configuration

Update Exercise Code in Repositories

You have two alternatives to update the exercise code:

Alternative 1: Clone and Edit Locally

- Clone the 3 repositories and adapt the code on your local computer in your preferred IDE

- To execute tests locally:

- Copy template (or solution) code into an assignment folder (location depends on language)

- Execute tests (e.g., using `mvn clean test` for Java)

- Commit and push your changes via Git

Special Notes for Haskell

The build file expects the solution repository in the solution subdirectory and allows a template subdirectory for easy local testing.

Convenient checkout script:

    #!/bin/sh
    # Clone the tests, template, and solution repositories of an exercise.
    # Arguments:
    #   $1: exercise short name
    #   $2: (optional) output folder name

    if [ -z "$1" ]; then
        echo "No exercise short name supplied."
        exit 1
    fi

    EXERCISE="$1"
    NAME="${2:-$1}"

    # Adapt BASE to your repository URL
    BASE="ssh://git@artemis.tum.de:7999/$EXERCISE/$EXERCISE"

    # Create a working copy of the template without Git metadata
    git clone "$BASE-tests.git" "$NAME" && \
        git clone "$BASE-exercise.git" "$NAME/template" && \
        git clone "$BASE-solution.git" "$NAME/solution" && \
        cp -R "$NAME/template" "$NAME/assignment" && \
        rm -rf "$NAME/assignment/.git/"

Special Notes for OCaml

The tests expect to be located in a tests folder next to the assignment and solution folders.

Convenient checkout script:

    #!/bin/sh
    # Clone the tests, template, and solution repositories of an exercise.
    # Arguments:
    #   $1: exercise short name
    #   $2: (optional) output folder name

    PREFIX="" # Set your course prefix

    if [ -z "$1" ]; then
        echo "No exercise short name supplied."
        exit 1
    fi

    EXERCISE="$PREFIX$1"
    NAME="${2:-$1}"

    # Adapt BASE to your repository URL
    BASE="ssh://git@artemis.tum.de:7999/$EXERCISE/$EXERCISE"

    git clone "$BASE-tests.git" "$NAME/tests"
    git clone "$BASE-exercise.git" "$NAME/template"
    git clone "$BASE-solution.git" "$NAME/solution"

    # Hardlink assignment interfaces so that all copies share the solution's interface file
    rm "$NAME/template/src/assignment.mli"
    rm "$NAME/tests/assignment/assignment.mli"
    rm "$NAME/tests/solution/solution.mli"
    ln "$NAME/solution/src/assignment.mli" "$NAME/template/src/assignment.mli"
    ln "$NAME/solution/src/assignment.mli" "$NAME/tests/assignment/assignment.mli"
    ln "$NAME/solution/src/assignment.mli" "$NAME/tests/solution/solution.mli"

Test script:

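    #!/bin/sh
    # Link the current directory as the assignment folder and run the tests.
    dir="$(realpath ./)"
    cd .. || exit 1
    # -f avoids an error if no previous link exists
    rm -f ./assignment
    ln -s "$dir" ./assignment
    cd tests || exit 1
    ./run.sh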

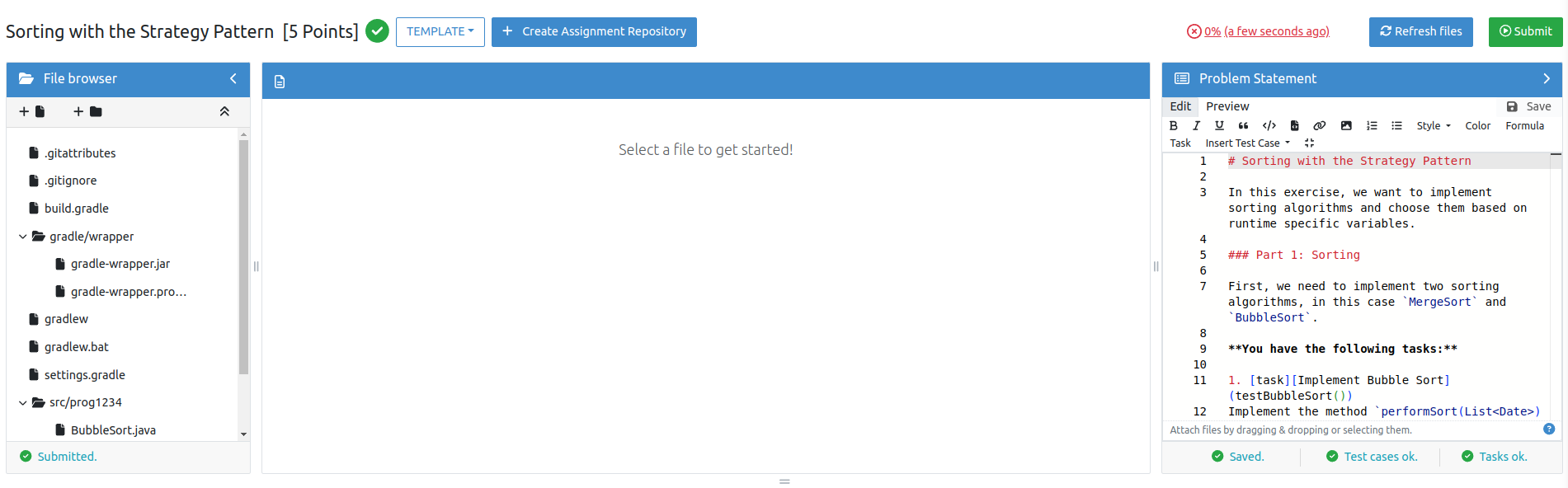

Alternative 2: Edit in Browser

Open the exercise in Artemis and adapt the code in the online editor.

You can switch between the different repositories and submit the code when done.

Testing Frameworks by Language

Write test cases in the Test repository using language-specific frameworks:

| No. | Language | Package Manager | Build System | Testing Framework |

|---|---|---|---|---|

| 1 | Java | Maven / Gradle | Maven / Gradle | JUnit 5 with Ares |

| 2 | Python | pip | pip | pytest |

| 3 | C | - | Makefile | Python scripts / FACT |

| 4 | Haskell | Stack | Stack | Tasty |

| 5 | Kotlin | Maven | Maven | JUnit 5 with Ares |

| 6 | VHDL | - | Makefile | Python scripts |

| 7 | Assembler | - | Makefile | Python scripts |

| 8 | Swift | SwiftPM | SwiftPM | XCTest |

| 9 | OCaml | opam | Dune | OUnit2 |

| 10 | Rust | cargo | cargo | cargo test |

| 11 | JavaScript | npm | npm | Jest |

| 12 | R | built-in | - | testthat |

| 13 | C++ | - | CMake | Catch2 |

| 14 | TypeScript | npm | npm | Jest |

| 15 | C# | NuGet | dotnet | NUnit |

| 16 | Go | built-in | built-in | go testing |

| 17 | Bash | - | - | Bats |

| 18 | MATLAB | mpminstall | - | matlab.unittest |

| 19 | Ruby | Gem | Rake | minitest |

| 20 | Dart | pub | built-in | package:test |

Check the build plan results:

- Template and solution build plans should not have Build Failed status

- If the build fails, check build errors in the build plan

Hint: Test cases should only reference code available in the template repository. If this is not possible, try the Sequential Test Runs option.

Adapt Build Script (Optional)

This section is optional. The preconfigured build script usually does not need changes.

If you need additional build steps or different configurations, activate Customize Build Script in the exercise create/edit/import screen. All changes apply to all builds (template, solution, student submissions).

Predefined build scripts in bash exist for all languages, project types, and configurations. Most languages clone the test repository into the root folder and the assignment repository into the assignment folder.

You can also use a custom Docker image. Make sure to:

- Publish the image in a publicly available repository (e.g., DockerHub)

- Build for both amd64 and arm64 architectures

- Keep the image size small (build agents download before execution)

- Include all build dependencies to avoid downloading in every build

For a smoother development experience, test custom exercises locally before publishing Docker images and uploading the exercise to Artemis.

Edit Repositories Checkout Paths (Optional)

Only available with Integrated Code Lifecycle

Preconfigured checkout paths usually don't need changes.

Checkout paths depend on the programming language and project type.

To change checkout paths, click edit repositories checkout path.

Update the build script accordingly (see the Adapt Build Script section).

Important:

- Checkout paths can only be changed during exercise creation, not after

- Depending on language/project type, some paths may be predefined and unchangeable

- Changing paths can cause build errors if build script is not adapted

- For C exercises with default Docker image, changing paths will cause build errors

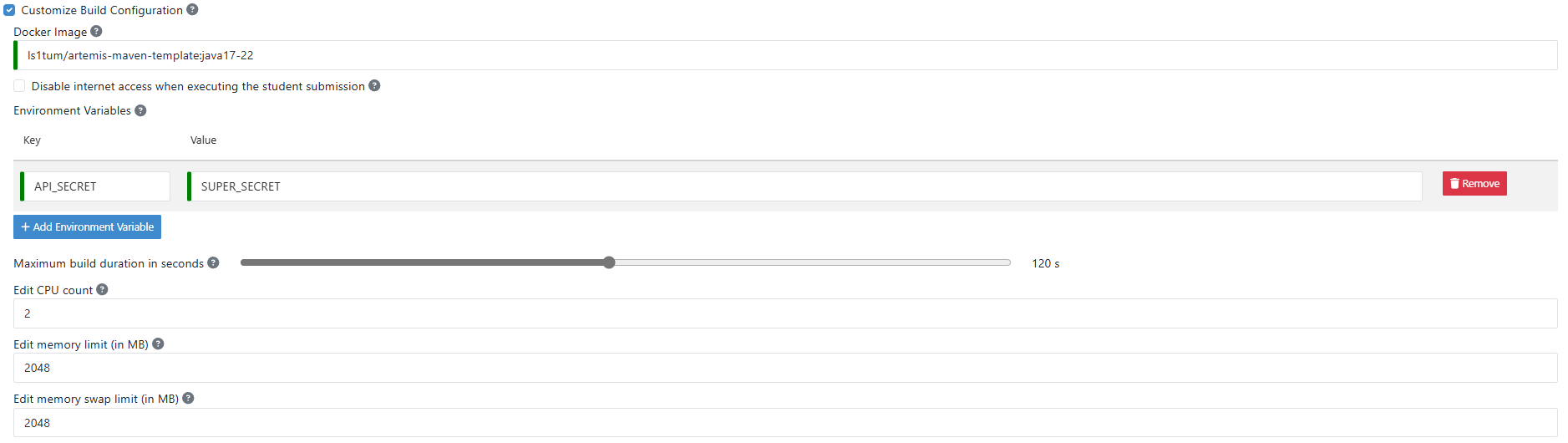

Edit Maximum Build Duration (Optional)

Only available with Integrated Code Lifecycle

The default maximum build duration (120 seconds) usually doesn't need changes.

Use the slider to adjust the time limit for build plan execution.

Edit Container Configuration (Optional)

Only available with Integrated Code Lifecycle

In most cases, the default container configuration does not need to be changed.

Currently, instructors can change whether the container has internet access, add additional environment variables, and configure resource limits such as CPU and memory.

Disabling internet access can be useful if instructors want to prevent students from downloading additional dependencies during the build process. With internet access disabled, the container cannot download anything during the build, so all dependencies must be included (cached) in the Docker image.

Additional environment variables can be added to the container configuration. This can be useful if the build process requires specific variables to be set.

Instructors can also adjust resource limits for the container: the number of CPU cores allocated to the container, and the maximum amount of memory and memory swap that the container is allowed to use. These settings help balance resource usage while keeping the build environment flexible. If set too high, the specified values may be overridden by the maximum limits set by the administrators. Contact the administrators for more information.

We plan to add more options to the container configuration in the future.

Configure Static Code Analysis

If static code analysis was activated, the Test repository contains configuration files.

For Java exercises, the staticCodeAnalysisConfig folder contains configuration files for each tool. Artemis generates default configurations with predefined rules. Instructors can freely customize these files.

On exercise import, configurations are copied from the imported exercise.

Supported Static Code Analysis Tools

| No. | Language | Supported Tools | Configuration File |

|---|---|---|---|

| 1 | Java | Spotbugs | spotbugs-exclusions.xml |

|  |  | Checkstyle | checkstyle-configuration.xml |

|  |  | PMD | pmd-configuration.xml |

|  |  | PMD CPD | (via PMD plugin) |

| 2 | Python | Ruff | ruff-student.toml |

| 3 | C | GCC | (via compiler flags) |

| 8 | Swift | SwiftLint | .swiftlint.yml |

| 10 | Rust | Clippy | clippy.toml |

| 11/14 | JavaScript/TypeScript | ESLint | eslint.config.mjs |

| 12 | R | lintr | .lintr |

| 13 | C++ | Clang-Tidy | .clang-tidy |

| 19 | Ruby | Rubocop | .rubocop.yml |

| 20 | Dart | dart analyze | analysis_options.yaml |

Maven plugins for Java static code analysis tools provide additional configuration options. GCC can be configured by passing flags in the tasks. See GCC Documentation.

Instructors can completely disable specific tools by removing the plugin/dependency from the build file (pom.xml or build.gradle) or by altering the task/script that executes the tools in the build plan.

Special case: PMD and PMD CPD share a common plugin. To disable one or the other, instructors must delete the execution of the specific tool from the build plan.

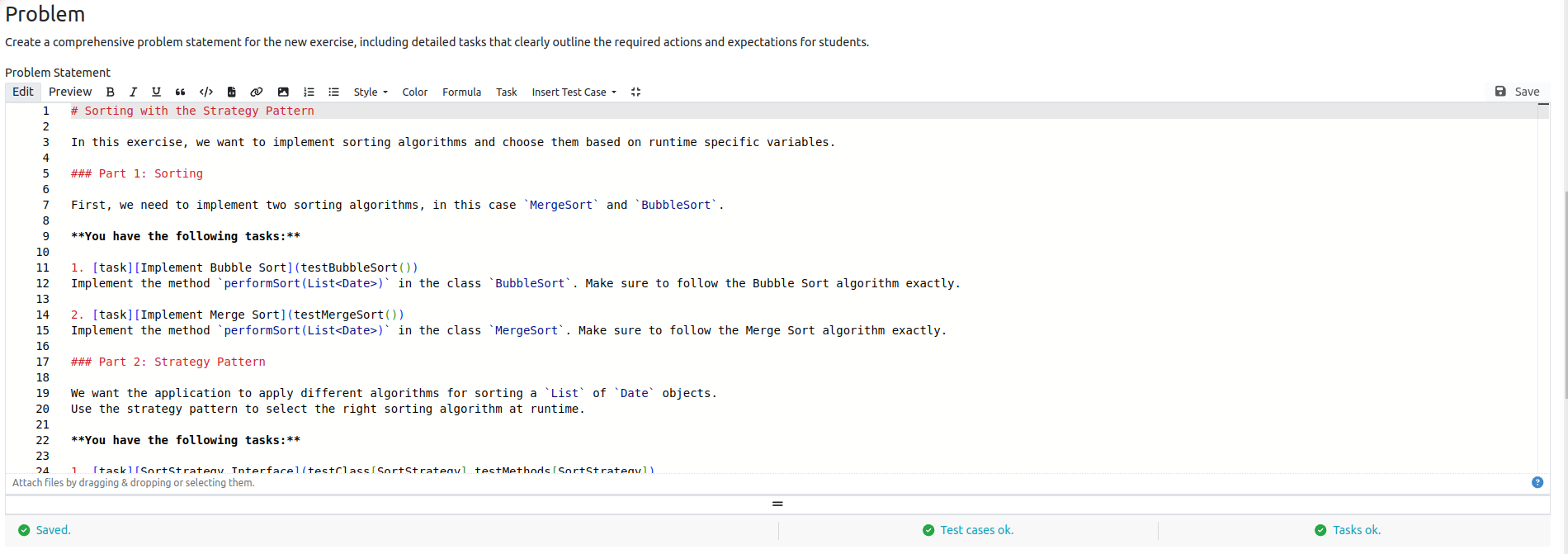

Adapt Interactive Problem Statement

Click on the programming exercise and adapt the interactive problem statement.

The initial example shows how to:

- Integrate tasks

- Link tests

- Integrate interactive UML diagrams

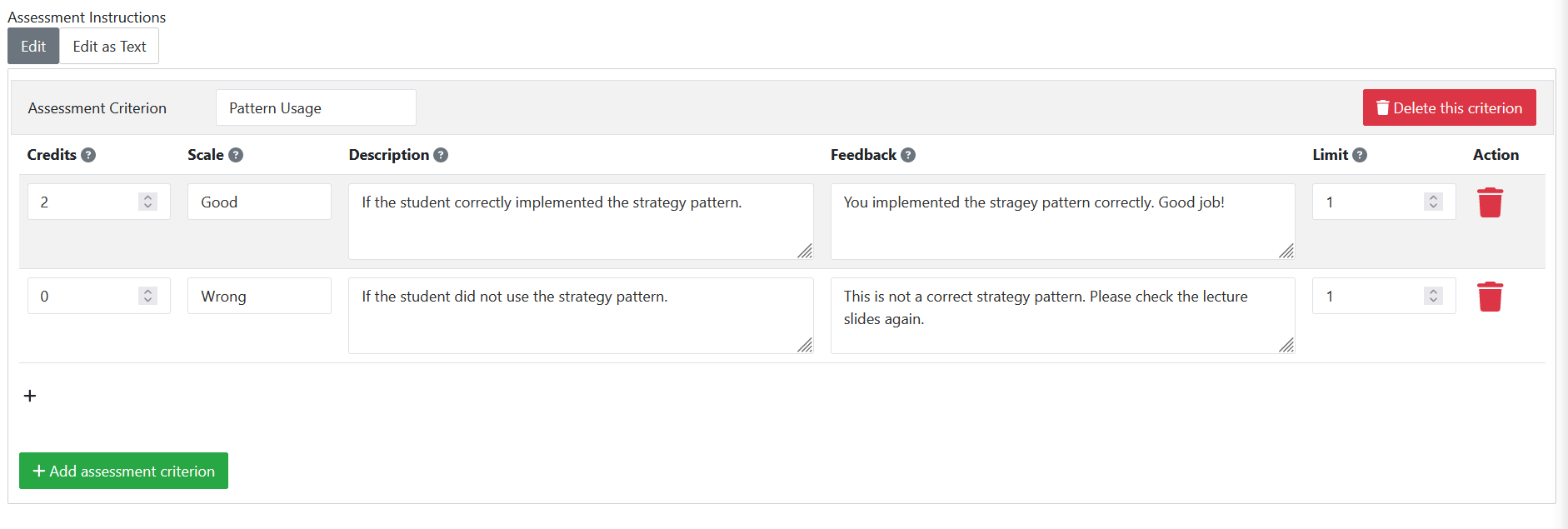

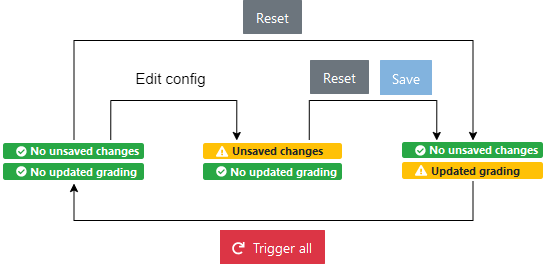

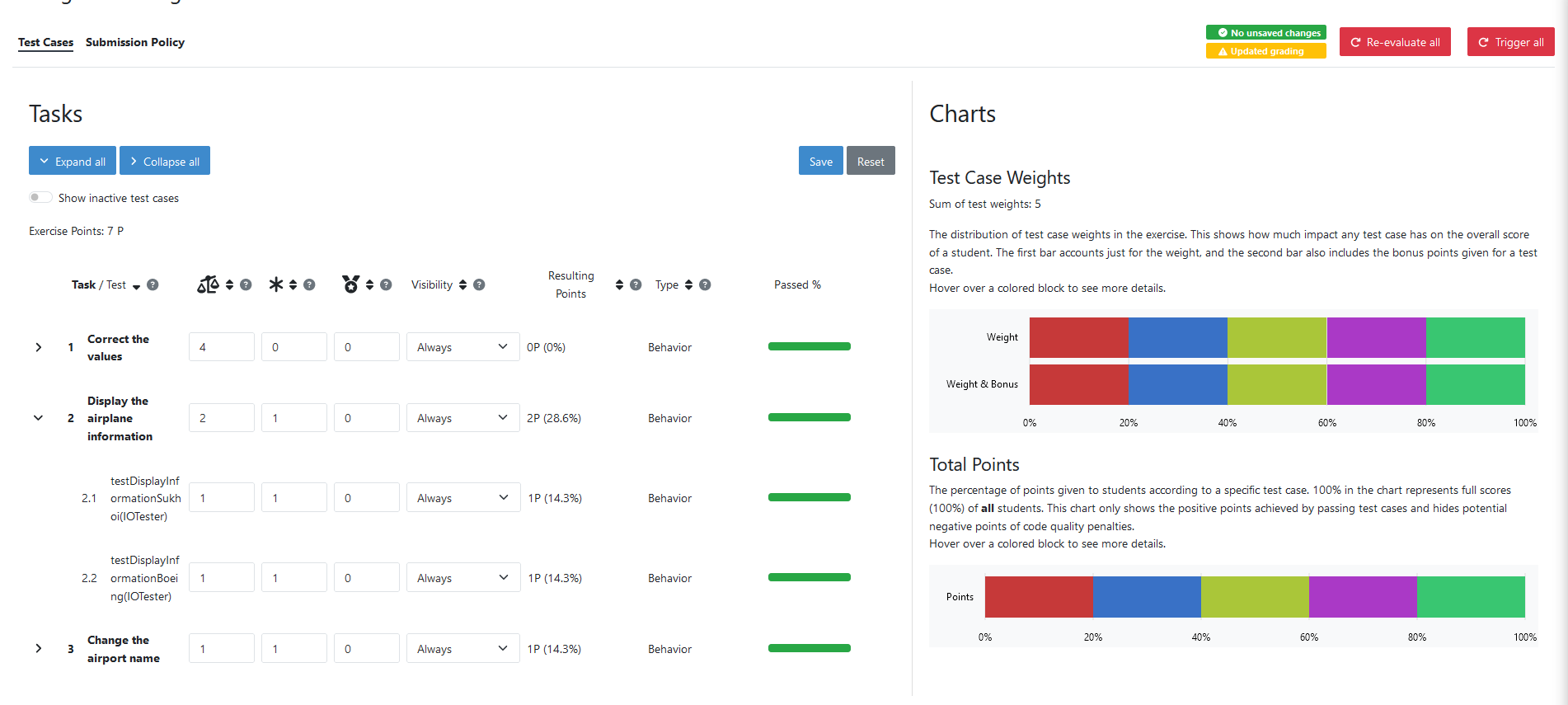

Configure Grading

The grading configuration determines how test cases contribute to the overall score and allows configuration of code quality issue penalties.

General Actions

Save: Save current grading configuration

Reset: Reset the current grading configuration of the open tab to the default values. For Test Case Tab, all test cases are set to weight 1, bonus multiplier 1, and bonus points 0. For the Code Analysis Tab, the default configuration depends on the selected programming language.

Re-evaluate All: Re-evaluate scores using currently saved settings and existing feedback

Trigger All: Trigger all build plans to create new results with updated configuration

Artemis always grades new submissions with the latest configuration, but existing submissions might use outdated configuration. Artemis warns about grading inconsistencies with the Updated grading badge.

Test Case Configuration

Adapt test case contribution to the overall score or set grading based on tasks.

Artemis registers tasks and test cases from the Test repository using results from the Solution build plan. Test cases only appear after the first Solution build plan execution.

If your problem statement does not contain tasks, task-based grading is not available. You can still configure grading based on individual test cases.

Left side configuration options:

- Task/Test Name: Task names (bold) are from the problem statement. Test names are from the Test repository

- Weight: Points for a test are proportional to weight (sum of weights as denominator). For tasks, weight is evenly distributed across test cases

- Bonus multiplier: Multiply points for passing a test without affecting other test points. For tasks, multiplier applies to all contained tests

- Bonus points: Add flat point bonus for passing a test. For tasks, bonus points are evenly distributed across test cases

- Visibility: Control feedback visibility:

- Always: Feedback visible immediately after grading

- After Due Date: Feedback visible only after due date (or individual due dates)

- Never: Feedback never visible to students. Not considered in score calculation

- Passed %: Statistics showing percentage of students passing/failing the test

Bonus points (score > 100%) are only achievable if at least one bonus multiplier > 1 or bonus points are given for a test case.

For manual assessments, all feedback details are visible to students even if the due date hasn't passed for others. Set an appropriate assessment due date to prevent information leakage.

Examples:

Example 1: Given an exercise with 3 test cases, a maximum of 10 points, and 10 achievable bonus points, the highest achievable score is 200%. Test Case (TC) A has weight 2, while TC B and TC C have weight 1 (bonus multiplier 1 and bonus points 0 for all test cases). A student who only passes TC A receives 50% of the maximum points (5 points).

Example 2: Given the configuration of Example 1 with an additional bonus multiplier of 2 for TC A. Passing TC A accounts for (2 × 2) / (2 + 1 + 1) × 100 = 100% of the maximum points (10). Passing TC B or TC C accounts for 1/4 × 100 = 25% of the maximum points (2.5). A student who passes all test cases receives a score of 150%, which amounts to 10 points and 5 bonus points.

Example 3: Given the configuration of Example 2 with additional bonus points of 5 for TC B. The points achieved for passing TC A and TC C do not change. Passing TC B now accounts for 2.5 points plus 5 bonus points (7.5). A student who passes all test cases receives 10 (TC A) + 7.5 (TC B) + 2.5 (TC C) points, which amounts to 10 points and 10 bonus points and a score of 200%.
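The three examples can be reproduced with a short calculation. The sketch below follows the formula used in the examples (weight × bonus multiplier / sum of weights × maximum points, plus flat bonus points). The names `CaseConfig` and `achieved_points` are hypothetical, and capping the result at maximum points plus bonus points is an assumption for this example.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class CaseConfig:
    name: str
    weight: float = 1.0
    bonus_multiplier: float = 1.0
    bonus_points: float = 0.0


def achieved_points(cases: List[CaseConfig], passed: Set[str],
                    max_points: float, max_bonus_points: float) -> float:
    """Sum the points of all passed test cases according to the grading configuration."""
    total_weight = sum(case.weight for case in cases)
    points = 0.0
    for case in cases:
        if case.name in passed:
            points += case.weight * case.bonus_multiplier / total_weight * max_points
            points += case.bonus_points
    # Assumption: the result cannot exceed maximum points plus achievable bonus points.
    return min(points, max_points + max_bonus_points)


example1 = [CaseConfig("A", weight=2), CaseConfig("B"), CaseConfig("C")]
example2 = [CaseConfig("A", weight=2, bonus_multiplier=2), CaseConfig("B"), CaseConfig("C")]
example3 = [CaseConfig("A", weight=2, bonus_multiplier=2), CaseConfig("B", bonus_points=5), CaseConfig("C")]

print(achieved_points(example1, {"A"}, 10, 10))            # prints 5.0
print(achieved_points(example2, {"A", "B", "C"}, 10, 10))  # prints 15.0
print(achieved_points(example3, {"A", "B", "C"}, 10, 10))  # prints 20.0
```

Dividing the result by the maximum points gives the score, for instance 20 / 10 = 200% in Example 3.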

The right side displays statistics:

- Weight Distribution: Impact of each test on score

- Total Points: Percentage of points awarded per test case across all students

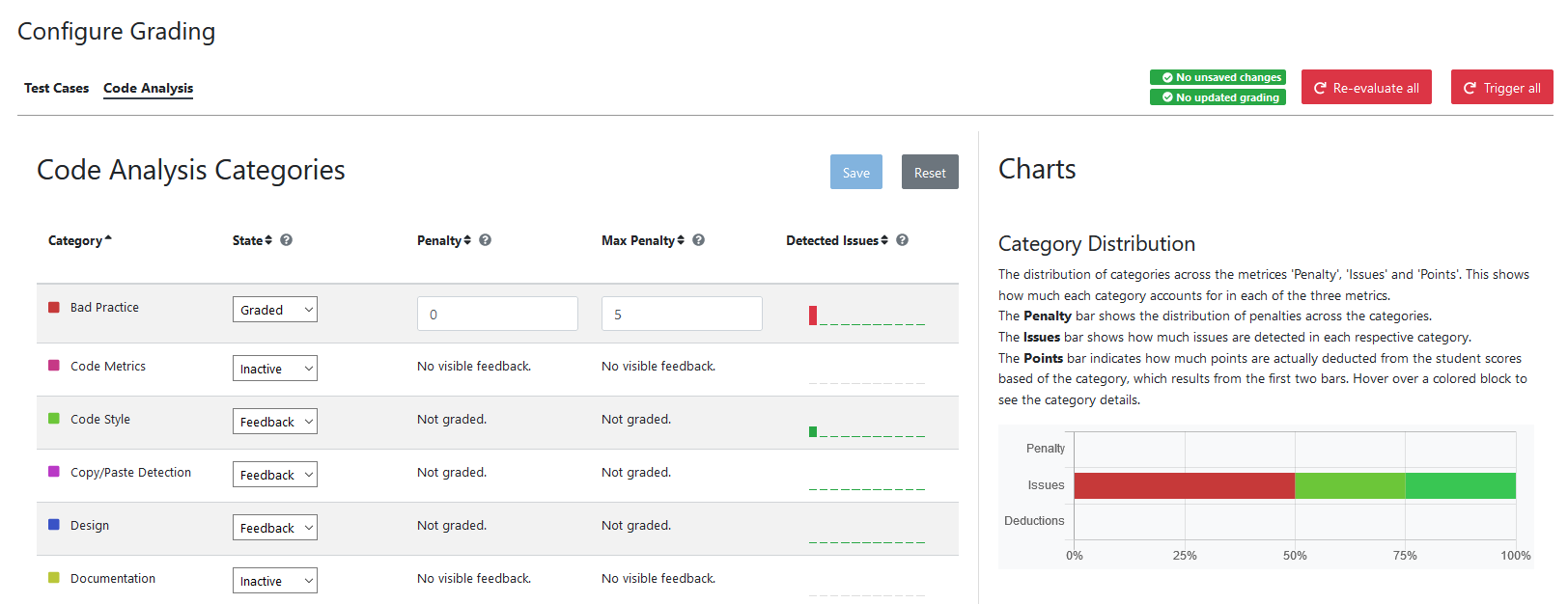

Code Analysis Configuration

Configure visibility and grading of code quality issues by category.

The Code Analysis tab is only available if static code analysis is enabled for the exercise.

Issues are grouped into categories. The following table shows the category mappings for Java, Swift, and C:

| Category | Description | Java | Swift | C |

|---|---|---|---|---|

| Bad Practice | Code that violates recommended and essential coding practices | Spotbugs BAD_PRACTICE, Spotbugs I18N, PMD Best Practices |  | GCC BadPractice |

| Code Style | Code that is confusing and hard to maintain | Spotbugs STYLE, Checkstyle blocks, Checkstyle coding, Checkstyle modifier, PMD Code Style | Swiftlint (all rules) |  |

| Potential Bugs | Coding mistakes, error-prone code or threading errors | Spotbugs CORRECTNESS, Spotbugs MT_CORRECTNESS, PMD Error Prone, PMD Multithreading |  | GCC Memory |

| Duplicated Code | Code clones | PMD CPD |  |  |

| Security | Vulnerable code, unchecked inputs and security flaws | Spotbugs MALICIOUS_CODE, Spotbugs SECURITY, PMD Security |  | GCC Security |

| Performance | Inefficient code | Spotbugs PERFORMANCE, PMD Performance |  |  |

| Design | Program structure/architecture and object design | Checkstyle design, PMD Design |  |  |

| Code Metrics | Violations of code complexity metrics or size limitations | Checkstyle metrics, Checkstyle sizes |  |  |

| Documentation | Code with missing or flawed documentation | Checkstyle javadoc, Checkstyle annotation, PMD Documentation |  |  |

| Naming & Format | Rules that ensure the readability of the source code (name conventions, imports, indentation, annotations, white spaces) | Checkstyle imports, Checkstyle indentation, Checkstyle naming, Checkstyle whitespace |  |  |

| Miscellaneous | Uncategorized rules | Checkstyle miscellaneous |  | GCC Misc |

For Swift, only the category Code Style can contain code quality issues currently. All other categories displayed on the grading page are dummies.

The GCC SCA option for C does not offer categories by default. Instead, Artemis assigns each issue to a category during parsing, based on the rule that reported it. For details on the default configuration and active rules, see the GCC - Static Code Analysis Default Configuration section below.

Other languages use categories defined by their static code analysis tool:

- JavaScript, TypeScript, C++, R: Only use the Lint category

- Dart: Dart's analyzer can generate these categories: TODO, HINT, COMPILE_TIME_ERROR, CHECKED_MODE_COMPILE_TIME_ERROR, STATIC_WARNING, SYNTACTIC_ERROR, LINT

- Python: Uses Ruff's tool names

- Ruby: Uses Rubocop's Department names

- Rust: Uses Clippy's lint groups

On the left side of the page, instructors can configure the static code analysis categories:

- Category: The name of the category defined by Artemis

- State:

- INACTIVE: Code quality issues of an inactive category are not shown to students and do not influence the score calculation

- FEEDBACK: Code quality issues of a feedback category are shown to students but do not influence the score calculation

- GRADED: Code quality issues of a graded category are shown to students and deduct points according to the Penalty and Max Penalty configuration

- Penalty: Artemis deducts the selected amount of points for each code quality issue from points achieved by passing test cases

- Max Penalty: Limits the amount of points deducted for code quality issues belonging to this category

- Detected Issues: Visualizes how many students encountered a specific number of issues in this category
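How State, Penalty, and Max Penalty interact can be sketched as follows. This is a simplified illustration based on the descriptions above, not Artemis code: the class `Category` and both functions are hypothetical, and the handling of empty values is not covered.

```python
from dataclasses import dataclass


@dataclass
class Category:
    state: str          # "INACTIVE", "FEEDBACK", or "GRADED"
    penalty: float      # points deducted per code quality issue
    max_penalty: float  # upper bound for deductions in this category


def category_deduction(category: Category, issue_count: int) -> float:
    """Only graded categories deduct points, limited by the category's max penalty."""
    if category.state != "GRADED":
        return 0.0
    return min(category.penalty * issue_count, category.max_penalty)


def is_visible_to_students(category: Category) -> bool:
    """Issues of feedback and graded categories are shown to students."""
    return category.state in ("FEEDBACK", "GRADED")


# Hypothetical configuration: 0.5 points per issue, at most 2 points in total
code_style = Category(state="GRADED", penalty=0.5, max_penalty=2.0)
print(category_deduction(code_style, 6))  # prints 2.0
print(category_deduction(Category("FEEDBACK", 0.5, 2.0), 6))  # prints 0.0
```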

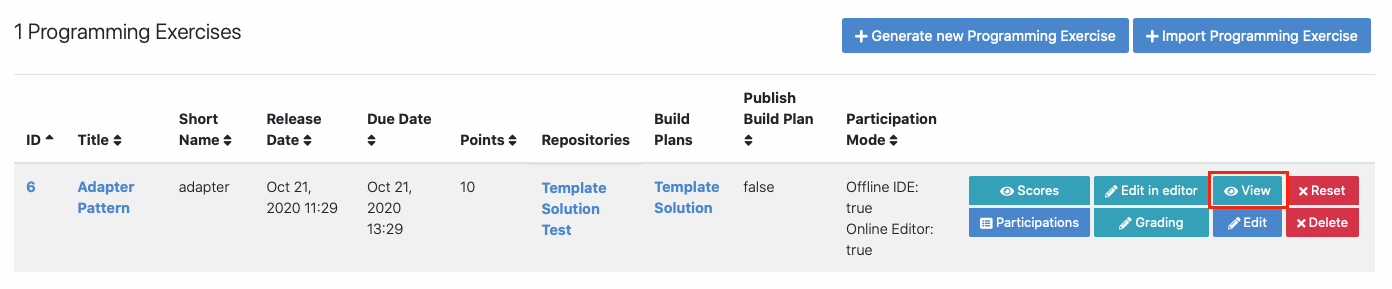

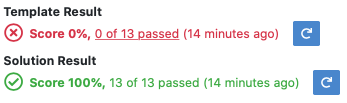

Verify Exercise Configuration

Open the page of the programming exercise.

Verify:

- Template result: Score 0% with "0 of X passed" (or "0 of X passed, 0 issues" with SCA)

- Solution result: Score 100% with "X of X passed" (or "X of X passed, 0 issues" with SCA)

If static code analysis finds issues in template/solution, improve the code or disable the problematic rule.

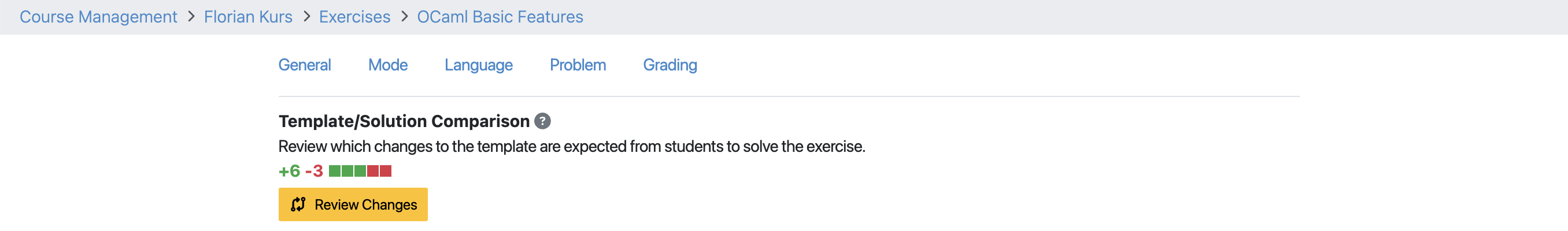

Review Template/Solution Differences

Review differences between template and solution repositories to verify expected student changes.

Click the  button to open the comparison view.


Verify Problem Statement Integration

Click . Below the problem statement, verify:

- Test cases: OK

- Hints: OK

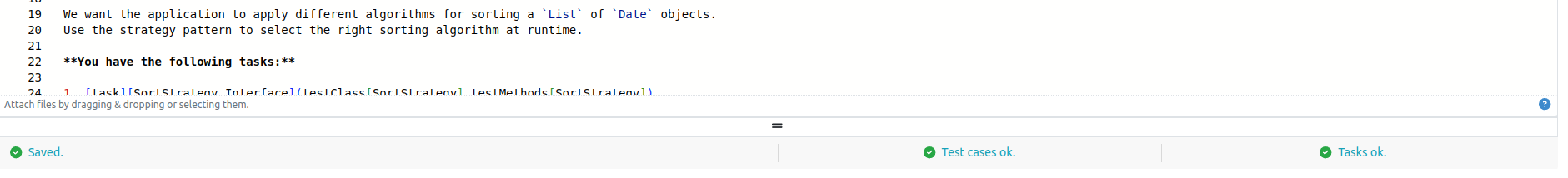

Feedback Analysis

After verifying the exercise configuration, the Feedback Analysis feature helps identify common student mistakes and improve grading efficiency.

Accessing Feedback Analysis:

- Navigate to Exercise Management

- Open the programming exercise

- Go to the grading section

- Click the Feedback Analysis tab

Key Features:

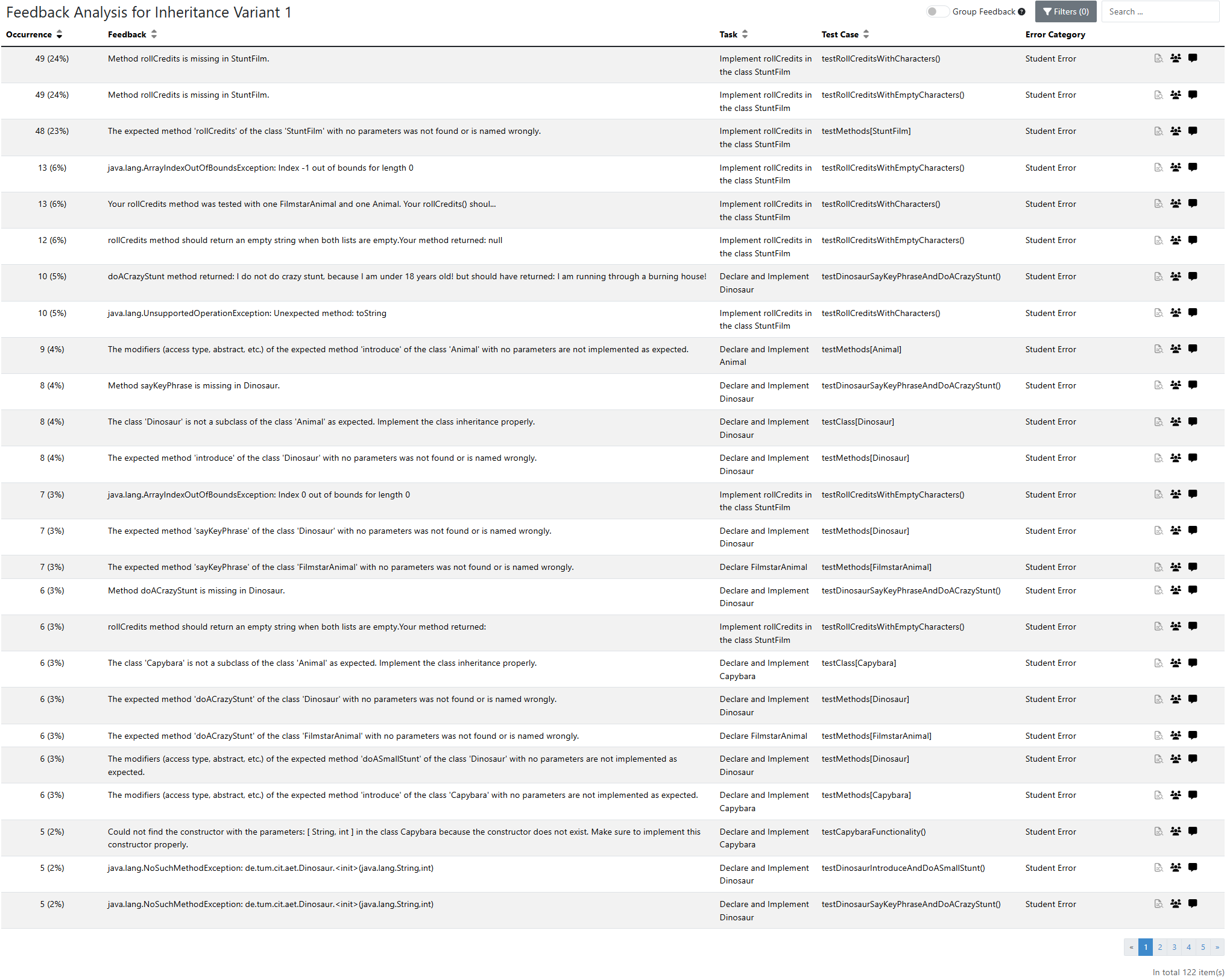

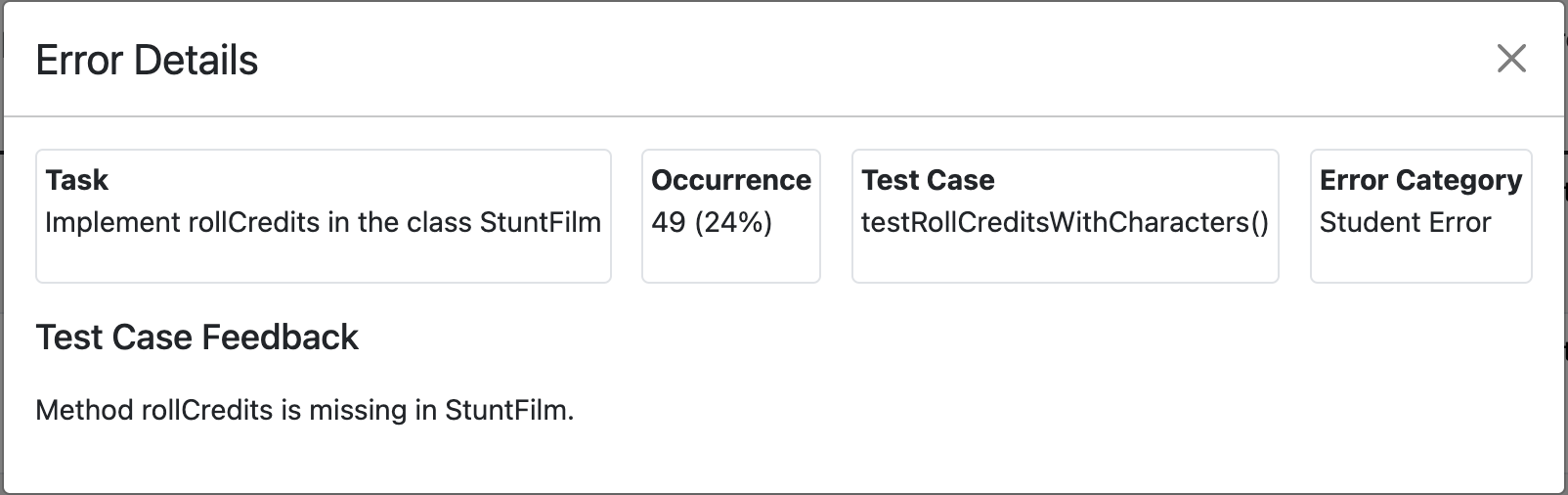

-

Filtering and Sorting

Filter by tasks, test cases, error categories, and occurrence frequency. Sort based on count or relevance.

Filtering Options -

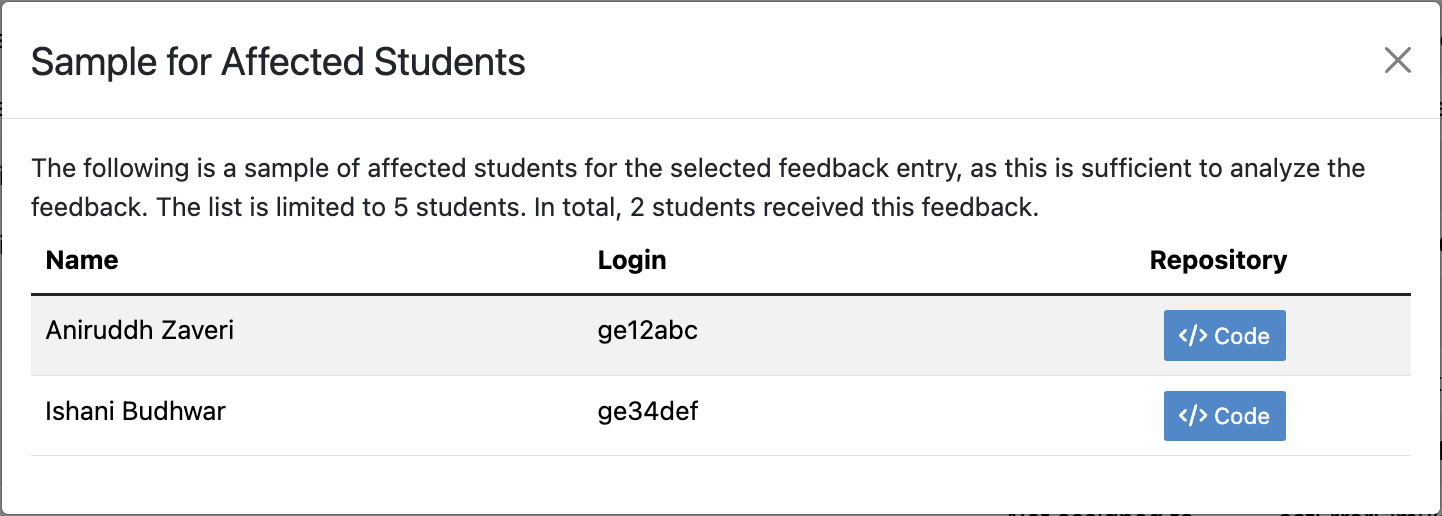

Affected Students Overview

View students affected by specific feedback and access their repositories for review.

Affected Students View -

Aggregated Feedback

- To avoid many single entries in case of testcases using random values and generating similar feedback, Groups similar feedback messages together all feedback to highlight frequently occurring errors.

- Displays grouped occurrence counts and relative frequencies to help instructors prioritize common issues.

-

Detailed Inspection

Click feedback entries to view full details in a modal window.

Detailed Feedback View -

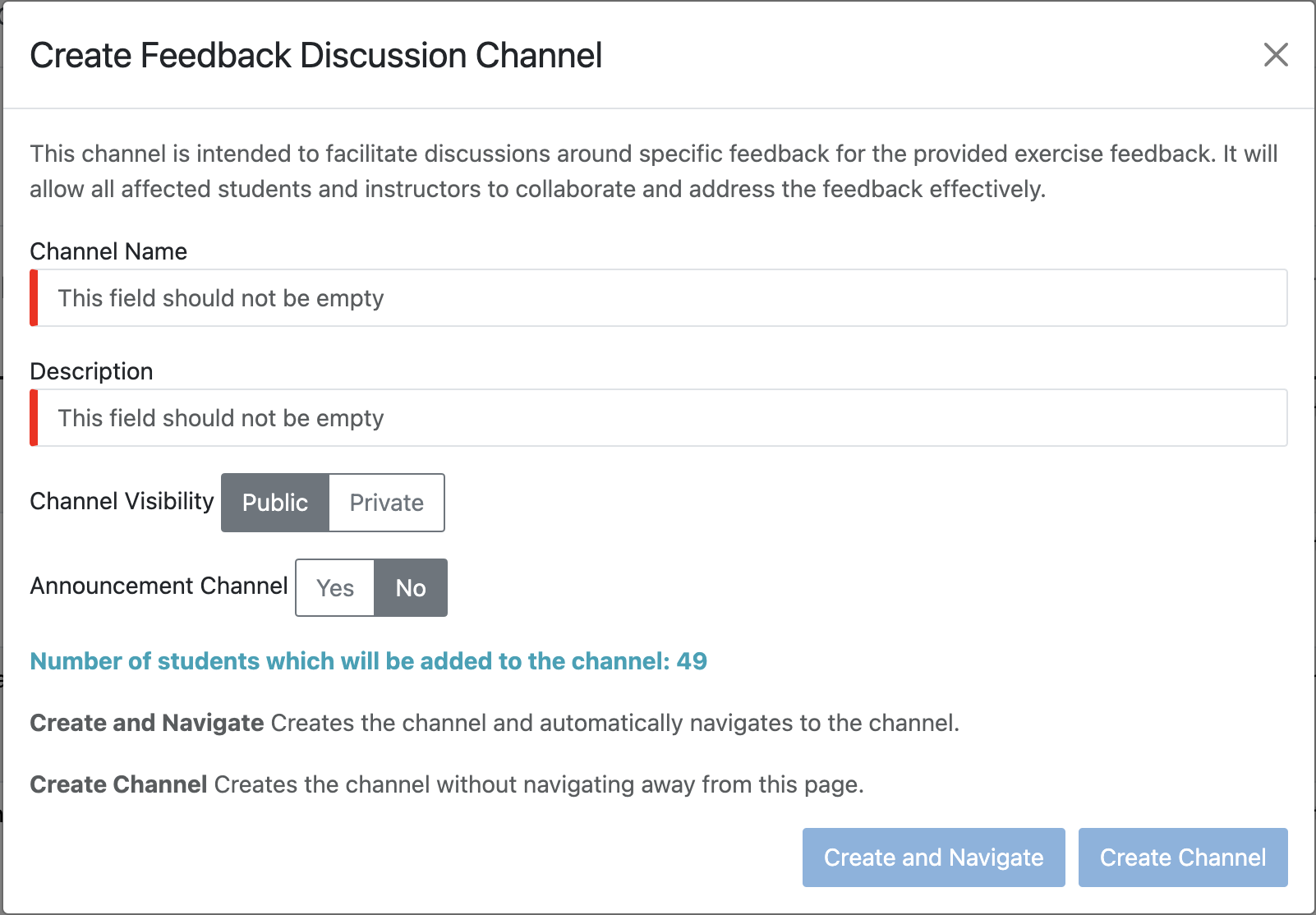

Communication & Collaboration

Create discussion channels directly from the feedback analysis view for collaborative grading.

Creating a Feedback Discussion Channel

Static Code Analysis Default Configuration

The following sections list the rules that are active for the default static code analysis configuration.

Java

Spotbugs

All tool categories and their rules are active by default except for the NOISE and EXPERIMENTAL categories. Refer to the Spotbugs documentation for a description of all rules.

Checkstyle

This table contains all rules that are activated by default when creating a new programming exercise. You can suppress a complete category by changing its visibility in the grading settings. For a more fine-grained configuration, you can add or remove rules by editing the checkstyle-configuration.xml file. For a description of the rules, refer to the Checkstyle documentation.

| Category (Tool/Artemis) | Rule | Properties |

|---|---|---|

| Coding / Code Style | EmptyStatement | |

| | EqualsHashCode | |

| | HiddenField | ignoreConstructorParameter="true"; ignoreSetter="true"; setterCanReturnItsClass="true" |

| | IllegalInstantiation | |

| | InnerAssignment | |

| | MagicNumber | |

| | MissingSwitchDefault | |

| | MultipleVariableDeclarations | |

| | SimplifyBooleanExpression | |

| | SimplifyBooleanReturn | |

| Class Design / Design | FinalClass | |

| | HideUtilityClassConstructor | |

| | InterfaceIsType | |

| | VisibilityModifier | |

| Block Checks / Code Style | AvoidNestedBlocks | |

| | EmptyBlock | |

| | NeedBraces | |

| Modifiers / Code Style | ModifierOrder | |

| | RedundantModifier | |

| Size Violations / Code Metrics | MethodLength | |

| | ParameterNumber | |

| | FileLength | |

| | LineLength | max="120" |

| Imports / Naming & Formatting | IllegalImport | |

| | RedundantImport | |

| | UnusedImports | processJavadoc="false" |

| Naming Conventions / Naming & Formatting | ConstantName | |

| | LocalFinalVariableName | |

| | LocalVariableName | |

| | MemberName | |

| | MethodName | |

| | ParameterName | |

| | TypeName | |

| Whitespace / Naming & Formatting | EmptyForIteratorPad | |

| | GenericWhitespace | |

| | MethodParamPad | |

| | NoWhitespaceAfter | |

| | NoWhitespaceBefore | |

| | OperatorWrap | |

| | ParenPad | |

| | TypecastParenPad | |

| | WhitespaceAfter | |

| | WhitespaceAround | |

| Javadoc Comments / Documentation | InvalidJavadocPosition | |

| | JavadocMethod | |

| | JavadocType | |

| | JavadocStyle | |

| | MissingJavadocMethod | allowMissingPropertyJavadoc="true"; allowedAnnotations="Override,Test"; tokens="METHOD_DEF,ANNOTATION_FIELD_DEF,COMPACT_CTOR_DEF" |

| Miscellaneous / Miscellaneous | ArrayTypeStyle | |

| | UpperEll | |

| | NewlineAtEndOfFile | |

| | Translation | |

PMD

For a description of the rules refer to the PMD documentation.

| Category (Tool/Artemis) | Rule |

|---|---|

| Best Practices / Bad Practice | AvoidUsingHardCodedIP |

| | CheckResultSet |

| | UnusedFormalParameter |

| | UnusedLocalVariable |

| | UnusedPrivateField |

| | UnusedPrivateMethod |

| | PrimitiveWrapperInstantiation |

| Code Style / Code Style | UnnecessaryImport |

| | ExtendsObject |

| | ForLoopShouldBeWhileLoop |

| | TooManyStaticImports |

| | UnnecessaryFullyQualifiedName |

| | UnnecessaryModifier |

| | UnnecessaryReturn |

| | UselessParentheses |

| | UselessQualifiedThis |

| | EmptyControlStatement |

| Design / Design | CollapsibleIfStatements |

| | SimplifiedTernary |

| | UselessOverridingMethod |

| Error Prone / Potential Bugs | AvoidBranchingStatementAsLastInLoop |

| | AvoidDecimalLiteralsInBigDecimalConstructor |

| | AvoidMultipleUnaryOperators |

| | AvoidUsingOctalValues |

| | BrokenNullCheck |

| | CheckSkipResult |

| | ClassCastExceptionWithToArray |

| | DontUseFloatTypeForLoopIndices |

| | ImportFromSamePackage |

| | JumbledIncrementer |

| | MisplacedNullCheck |

| | OverrideBothEqualsAndHashcode |

| | ReturnFromFinallyBlock |

| | UnconditionalIfStatement |

| | UnnecessaryConversionTemporary |

| | UnusedNullCheckInEquals |

| | UselessOperationOnImmutable |

| Multithreading / Potential Bugs | AvoidThreadGroup |

| | DontCallThreadRun |

| | DoubleCheckedLocking |

| Performance / Performance | BigIntegerInstantiation |

| Security / Security | All rules |

PMD CPD

Artemis uses the following default configuration to detect code duplications for the category Copy/Paste Detection. For a description of the various PMD CPD configuration parameters refer to the PMD CPD documentation.

<!-- Minimum amount of duplicated tokens triggering the copy-paste detection -->

<minimumTokens>60</minimumTokens>

<!-- Ignore literal value differences when evaluating a duplicate block.

If true, foo=42; and foo=43; will be seen as equivalent -->

<ignoreLiterals>true</ignoreLiterals>

<!-- Similar to ignoreLiterals but for identifiers, i.e. variable names, methods names.

If activated, most tokens will be ignored, so minimumTokens must be lowered significantly -->

<ignoreIdentifiers>false</ignoreIdentifiers>

C

GCC

For a description of the rules/warnings refer to the GCC Documentation. For readability reasons the rule/warning prefix -Wanalyzer- is omitted.

| Category (Tool/Artemis) | Rule |

|---|---|

| Memory Management / Potential Bugs | free-of-non-heap |

| | malloc-leak |

| | file-leak |

| | mismatching-deallocation |

| Undefined Behavior / Potential Bugs | double-free |

| | null-argument |

| | use-after-free |

| | use-of-uninitialized-value |

| | write-to-const |

| | write-to-string-literal |

| | possible-null-argument |

| | possible-null-dereference |

| Bad Practice / Bad Practice | double-fclose |

| | too-complex |

| | stale-setjmp-buffer |

| Security / Security | exposure-through-output-file |

| | unsafe-call-within-signal-handler |

| | use-of-pointer-in-stale-stack-frame |

| | tainted-array-index |

| Miscellaneous / Miscellaneous | Rules not matching to above categories |

GCC output can still contain normal warnings and compilation errors. These are also added to the Miscellaneous category. As of GCC 11.1.0, any warning or error that does not belong to the first four categories above is not an SCA issue, so it is usually best to disable the Miscellaneous category.

Exercise Import

Exercise import copies repositories, build plans, interactive problem statement, and grading configuration from an existing exercise.

Import Steps

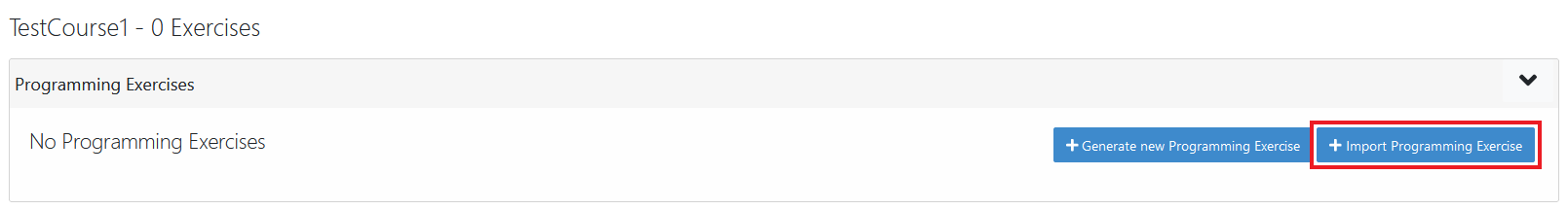

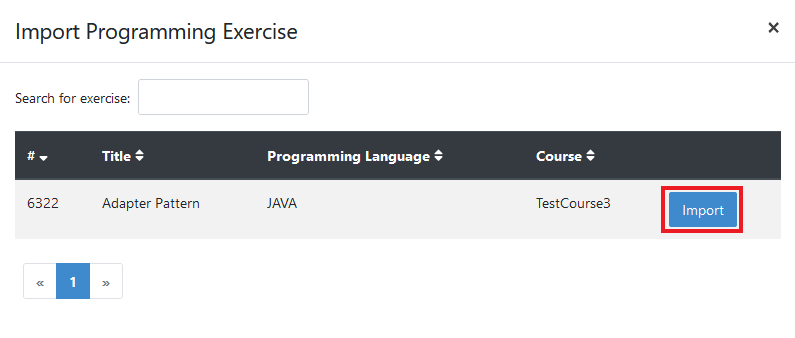

- Open Course Management: Open Course Management and navigate to Exercises of your preferred course.

- Import Programming Exercise: Click Import Programming Exercise, then select an exercise to import.

ℹ️ Instructors can import exercises from courses where they are registered as instructors.

- Configure Import Options:

  - Recreate Build Plans: Create new build plans instead of copying them from the imported exercise

  - Update Template: Update template files in repositories. Useful for outdated exercises. For Java, replaces JUnit4 with Ares (JUnit5) and updates dependencies. May require test case adaptation

ℹ️ Recreate Build Plans and Update Template are automatically set if the static code analysis option changes compared to the imported exercise. The plugins, dependencies, and static code analysis tool configurations are added/deleted/copied depending on the new and the original state of this option.

- Complete Import: Fill in the mandatory values and click .

ℹ️ The interactive problem statement can be edited after import. Some options like Sequential Test Runs cannot be changed on import.

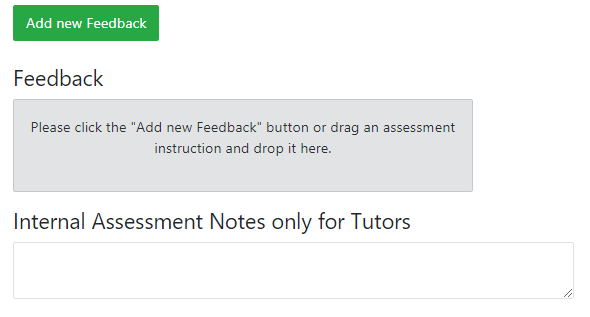

Manual Assessment

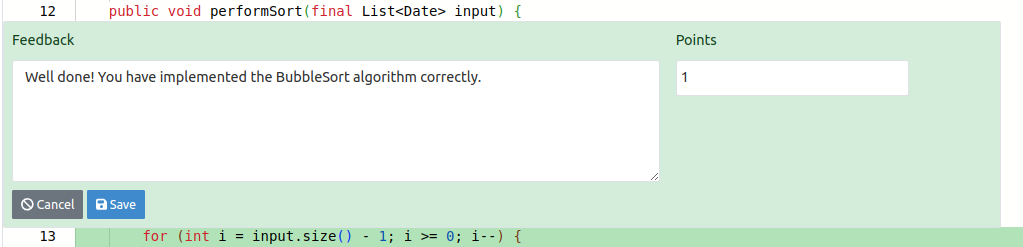

You can use the online editor to assess student submissions directly in the browser.

The online editor provides features tailored to assessment:

- File browser: Shows student submission files. Changed files are highlighted in yellow

- Build output: Shows build process output, useful for build errors

- Read-only editor: View student code with changed lines highlighted

- Instructions: Provides structured grading criteria and problem statement, including tasks successfully solved (determined by test cases). Review test cases by clicking the passing test count next to tasks

- Result: Top right corner shows current submission result. Click to review test cases and feedback

Add feedback directly in the source code by hovering over a line and clicking . Alternatively, press the "+" key while the editor is focused to open the feedback widget at the cursor line.

After clicking :

- Enter a feedback comment and score

- Or drag structured assessment criteria from the instructions to the feedback area

- Click  to save

- Click  to discard

Add general feedback (not tied to a specific file or line) by scrolling to the bottom and clicking .

Save changes with  (top right). Finalize the assessment with .

You can save multiple times before submitting. Once submitted, you cannot make changes unless you are an instructor.

Repository Access Configuration

If you are a student looking for repository access information, see the student documentation on integrated code lifecycle setup.

The following sections explain repository types and access rights for different user roles.

Repository Types

| Repository Type | Description |

|---|---|

| Base | Repositories set up during exercise creation (template, solution, tests, auxiliary repositories) |

| Student Assignment | Student's assignment repository copied from template. Includes team assignments |

| Teaching Assistant Assignment | Assignment repository created by a Teaching Assistant for themselves |

| Instructor Assignment | Assignment repository created by editor/instructor. Not available for exam exercises |

| Student Practice | Student's practice repository (from template or assignment). Created after due date. Not available for exams |

| Teaching Assistant Practice | Practice repository created by Teaching Assistant. Not available for exams |

| Instructor Practice | Practice repository created by editor/instructor. Not available for exams |

| Instructor Exam Test Run | Test run repository for exam testing before release. Should be deleted before exam |

User Roles

| Role | Description |

|---|---|

| Student | Course student |

| Teaching Assistant | Course tutor |

| Editor | Course editor |

| Instructor | Course instructor |

Editors and instructors have the same access rights in the table below.

Time Periods

- Before start: Before exercise/exam start date

- Working time: After start date, before due/end date

- After due: After due/end date

For Instructor Exam Test Run, "Before start" is the test run start date and "After due" is the test run end date (both before exam start).

Read access (R) includes git fetch, git clone, git pull.

Write access (W) corresponds to git push.

Access Rights Table

| Repository Type | Role | Time Period | Access |

|---|---|---|---|

| Base | Student | all | none |

| | Teaching Assistant | all | R |

| | Instructor | all | R/W |

| Student Assignment | Student | Before start | none |

| | Student | Working time | R/W |

| | Student | After due | R¹ |

| | Teaching Assistant | all | R |

| | Instructor | all | R/W |

| Teaching Assistant Assignment | Student | all | none |

| | Teaching Assistant | Before start | R |

| | Teaching Assistant | Working time | R/W |

| | Teaching Assistant | After due | R |

| | Instructor | all | R/W |

| Instructor Assignment | Student | all | none |

| | Teaching Assistant | all | R |

| | Instructor | all | R/W² |

| Student Practice | Student | Before start | none |

| | Student | Working time | none |

| | Student | After due | R/W |

| | Teaching Assistant | Before start | none |

| | Teaching Assistant | Working time | none |

| | Teaching Assistant | After due | R |

| | Instructor | Before start | none |

| | Instructor | Working time | none |

| | Instructor | After due | R/W |

| Teaching Assistant Practice | Student | all | none |

| | Teaching Assistant | Before start | none |

| | Teaching Assistant | Working time | none |

| | Teaching Assistant | After due | R/W |

| | Instructor | Before start | none |

| | Instructor | Working time | none |

| | Instructor | After due | R/W |

| Instructor Practice | Student | all | none |

| | Teaching Assistant | Before start | none |

| | Teaching Assistant | Working time | none |

| | Teaching Assistant | After due | R |

| | Instructor | Before start | none |

| | Instructor | Working time | none |

| | Instructor | After due | R/W |

| Instructor Exam Test Run | Student | all | none |

| | Teaching Assistant | all | R |

| | Instructor | all | R/W |

Notes:

- ¹ Only valid for course exercises. Students cannot read exam exercise repositories after the due date.

- ² Instructors can access the Instructor Assignment repository via the online editor from Course Management (Edit in editor) or Course Overview (Open code editor). After the due date, pushing is only possible via the online editor from Course Management or a local Git client. The online editor from Course Overview shows a locked repository.

Practice repositories, Teaching Assistant assignment, and instructor assignment repositories only exist for course exercises.
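To make the table easier to read, the following Java sketch encodes the three time periods and the Student rows of the Student Assignment repository. It is an illustration only, not Artemis code: the names `RepositoryAccessSketch`, `periodAt`, and `studentAssignmentAccess` are hypothetical, and treating the exact start and due instants as already belonging to the later period is an assumption.

```java
import java.time.Instant;

final class RepositoryAccessSketch {

    enum Period { BEFORE_START, WORKING_TIME, AFTER_DUE }

    /** Classifies a point in time relative to the start and due/end date. */
    static Period periodAt(Instant now, Instant start, Instant due) {
        if (now.isBefore(start)) {
            return Period.BEFORE_START;
        }
        if (now.isBefore(due)) {
            return Period.WORKING_TIME;
        }
        return Period.AFTER_DUE; // boundary handling is an assumption
    }

    /** Access of a student to their own Student Assignment repository, following the table above. */
    static String studentAssignmentAccess(Period period, boolean examExercise) {
        switch (period) {
            case BEFORE_START:
                return "none";
            case WORKING_TIME:
                return "R/W";
            default:
                // Students cannot read exam exercise repositories after the due date
                return examExercise ? "none" : "R";
        }
    }
}
```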

Testing with Ares

Ares is a JUnit 5 extension for easy and secure Java testing on Artemis.

Main features:

- Security manager to prevent students from crashing tests or cheating

- More robust tests and builds with limits on time, threads, and I/O

- Support for public and hidden Artemis tests with custom due dates

- Utilities for improved feedback (multiline error messages, exception location hints)

- Utilities to test exercises using System.out and System.in

For more information see Ares GitHub

Best Practices for Writing Test Cases

The following sections describe best practices for writing test cases. Examples are specifically for Java (using Ares/JUnit5), but practices can be generalized for other languages.

General Best Practices

Write Meaningful Comments for Tests

Comments should contain:

- What is tested specifically

- Which task from problem statement is addressed

- How many points the test is worth

- Additional necessary information

Keep information consistent with Artemis settings like test case weights.

/**

* Tests that borrow() in Book successfully sets the available attribute to false

* Problem Statement Task 2.1

* Worth 1.5 Points (Weight: 1)

*/

@Test

public void testBorrowInBook() {

// Test Code

}

Better yet, put this information into the display name so that it is visible during manual correction:

@DisplayName("1.5 P | Books can be borrowed successfully")

@Test

public void testBorrowInBook() {

// Test Code

}

Use Appropriate and Descriptive Names for Test Cases

Test names are used for statistics. Avoid generic names like test1, test2, test3.

@Test

public void testBorrowInBook() {

// Test Code

}

If tests are in different (nested) classes, add class name to avoid duplicates:

@Test

public void test_LinkedList_add() {

// Test Code

}

For Java: If all test methods are in a single class, this is unnecessary (compiler won't allow duplicate methods).

Use Appropriate Timeouts for Test Cases

For regular tests, @StrictTimeout(1) (1 second) is usually sufficient. For shorter timeouts:

@Test

@StrictTimeout(value = 500, unit = TimeUnit.MILLISECONDS)

public void testBorrowInBook() {

// Test Code

}

The annotation can also be applied to an entire test class.

Remember that tests run on CI servers (build agents), where they usually execute more slowly than on local machines.

Avoid Assert Statements

Use conditional fail() calls instead of assert statements so that students do not see confusing details such as the expected and actual values.

❌ Not recommended:

@Test

public void testBorrowInBook() {

Object book = newInstance("Book", 0, "Some title");

invokeMethod(book, "borrow");

assertFalse((Boolean) invokeMethod(book, "isAvailable"),

"A borrowed book must be unavailable!");

}

Shows: org.opentest4j.AssertionFailedError: A borrowed book must be unavailable! ==> Expected <false> but was <true>

✅ Recommended:

@Test

public void testBorrowInBook() {

Object book = newInstance("Book", 0, "Some title");

invokeMethod(book, "borrow");

if ((Boolean) invokeMethod(book, "isAvailable")) {

fail("A borrowed book is not available anymore!");

}

}

Shows: org.opentest4j.AssertionFailedError: A borrowed book is not available anymore!

Write Tests Independent of Student Code

Students can break anything. Use reflective operations instead of direct code references.

❌ Not recommended (causes build errors):

@Test

public void testBorrowInBook() {

Book book = new Book(0, "Some title");

book.borrow();

if (book.isAvailable()) {

fail("A borrowed book must be unavailable!");

}

}

✅ Recommended (provides meaningful errors):

@Test

public void testBorrowInBook() {

Object book = newInstance("Book", 0, "Some title");

invokeMethod(book, "borrow");

if ((Boolean) invokeMethod(book, "isAvailable")) {

fail("A borrowed book must be unavailable!");

}

}

Error message: The class 'Book' was not found within the submission. Make sure to implement it properly.
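The Ares helpers used above hide the reflection details. As a rough plain-Java sketch of the underlying idea (this is not the Ares implementation, and the names `ReflectiveLookupSketch`, `findClass`, and `invokeNoArg` are hypothetical), a test helper can look up classes and methods by name and turn missing elements into readable failures:

```java
final class ReflectiveLookupSketch {

    /** Looks up a class by name and turns a missing class into a readable message. */
    static Class<?> findClass(String qualifiedName) {
        try {
            return Class.forName(qualifiedName);
        } catch (ClassNotFoundException e) {
            throw new AssertionError("The class '" + qualifiedName
                    + "' was not found within the submission. Make sure to implement it properly.");
        }
    }

    /** Invokes a public no-argument method by name and reports a missing method readably. */
    static Object invokeNoArg(Object target, String methodName) {
        try {
            return target.getClass().getMethod(methodName).invoke(target);
        } catch (NoSuchMethodException e) {
            throw new AssertionError("The method '" + methodName + "' was not found in "
                    + target.getClass().getSimpleName() + ".");
        } catch (ReflectiveOperationException e) {
            throw new AssertionError("Calling '" + methodName + "' failed: " + e);
        }
    }
}
```

Because the test code never references the student class directly, a missing class or method becomes a failed test with an explanation instead of a build error for the whole submission.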

Check for Hard-Coded Student Solutions

Students may hardcode values to pass specific tests. Verify solutions fulfill actual requirements, especially in exams.
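One way to make hard-coding harder is to compare the submission against a reference implementation on randomized inputs. The following plain-Java sketch shows the idea without any test framework; the names `RandomizedCheckSketch` and `firstMismatch` as well as the input range are hypothetical choices for illustration:

```java
import java.util.Random;
import java.util.function.IntUnaryOperator;

final class RandomizedCheckSketch {

    /**
     * Compares a submission against a reference implementation on random inputs.
     * Returns null if all inputs agree, otherwise a message naming the first mismatch.
     */
    static String firstMismatch(IntUnaryOperator submission, IntUnaryOperator reference,
                                long seed, int runs) {
        Random random = new Random(seed); // a fixed seed keeps failures reproducible
        for (int i = 0; i < runs; i++) {
            int input = random.nextInt(2001) - 1000; // inputs in [-1000, 1000]
            int expected = reference.applyAsInt(input);
            int actual = submission.applyAsInt(input);
            if (expected != actual) {
                return "Wrong result for input " + input
                        + ": expected " + expected + " but was " + actual;
            }
        }
        return null;
    }
}
```

In a real test, a non-null return value would be passed to fail(). A submission that always returns a constant to satisfy one published example is very unlikely to survive such a check.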

Avoid Relying on Specific Task Order

Each test should cover one aspect and should not require other parts of the exercise to be implemented.

Example: Testing translate and runService methods where runService calls translate.

❌ Not recommended (assumes translate is implemented):

@Test

public void testRunServiceInTranslationServer() {

String result = translationServer.runService("French", "Dog");

assertEquals("Dog:French", result);

}

✅ Recommended (overrides translate to test runService independently):

@Test

public void testRunServiceInTranslationServer() {

TranslationServer testServer = new TranslationServer() {

public String translate(String word, String language) {

return word + ":" + language;

}

};

String expected = "Dog:French";

String actual = testServer.runService("French", "Dog");

if(!expected.equals(actual)) fail("Descriptive fail message");

}

Students might declare classes or methods final, which would make this approach fail with a compilation error. Address this in the problem statement or in the tests.

Catch Possible Student Errors

Handle student mistakes appropriately. For example, null returns can cause NullPointerException.

@Test

public void testBorrowInBook() {

Object book = newInstance("Book", 0, "Some title");

Object result = invokeMethod(book, "getTitle");

if (result == null) {

fail("getTitle() returned null!");

}

// Continue with test

}

Java Best Practices

Use Constant String Attributes for Base Package

Avoid repeating long package identifiers:

private static final String BASE_PACKAGE = "de.tum.in.ase.pse.";

@Test

public void testBorrowInBook() {

Object book = newInstance(BASE_PACKAGE + "Book", 0, "Some title");

// Test Code

}

Use JUnit5 and Ares Features

More information: JUnit5 Documentation and Ares GitHub

Useful features:

- Nested Tests to group tests

- @Order to define custom test execution order

- assertDoesNotThrow for exception handling with custom messages

- assertAll to aggregate multiple assertion failures

- Dynamic Tests for special needs

- Custom extensions

- JUnit Platform Test Kit for testing tests

Define Custom Annotations

Combine annotations for better readability:

@Test

@StrictTimeout(10)

@Retention(RetentionPolicy.RUNTIME)

@Target({ElementType.METHOD})

public @interface LongTest {

}

Consider Using jqwik for Property-Based Testing

jqwik allows testing with arbitrary inputs and shrinks errors to excellent counter-examples (usually edge cases).

Eclipse Compiler and Best-Effort Compilation

Use Eclipse Java Compiler for partial, best-effort compilation. Useful for exam exercises and complicated generics.

Compilation errors are transformed into errors thrown where code doesn't compile (method/class level). The body is replaced with throw new Error("Unresolved compilation problems: ...").
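The effect can be pictured with a hand-written stand-in. In the sketch below, `brokenMethod` imitates what a method looks like after its body has been replaced, while `workingMethod` compiled normally. The class and method names are hypothetical, and the real transformation is performed by the compiler, not written by hand:

```java
import java.util.function.IntSupplier;

final class BestEffortCompilationSketch {

    /** Stand-in for a student method whose body did not compile. */
    static int brokenMethod() {
        throw new Error("Unresolved compilation problems: ...");
    }

    /** Stand-in for a method in the same class that compiled normally. */
    static int workingMethod() {
        return 42;
    }

    /** Runs one check and reports its outcome instead of aborting the whole run. */
    static String run(IntSupplier check) {
        try {
            return "ok: " + check.getAsInt();
        } catch (Error e) {
            return "failed: " + e.getMessage();
        }
    }
}
```

Only the check that touches the broken method fails at runtime; checks for the parts that compiled still run and can earn points.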

Important: Only method/class bodies should fail to compile, not complete test classes. Anything outside method/nested class bodies must compile, including method signatures, return types, parameter types, and lambdas. Use nested classes for fields/methods with student class types that might not compile.

The Eclipse Compiler may not support the latest Java version. You can compile student code with the latest Java and test code with the previous version.

See old documentation for Maven configuration examples.

Common Pitfalls / Problems

- Reflection API limitation: Constant attributes (static final primitives/Strings) are inlined at compile-time, making them impossible to change at runtime

- Long output: Arrays or Strings with long output may be unreadable or truncated after 5000 characters
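For the long-output pitfall, one possible mitigation is to shorten large values before they are placed in a failure message. The helper below is a hypothetical sketch (the names `FeedbackTextSketch` and `abbreviate` are not part of Ares or Artemis) that keeps the beginning and the end of a text and marks the omitted middle:

```java
final class FeedbackTextSketch {

    private static final String MARKER = " ... ";

    /** Shortens text to at most maxLength characters, keeping its head and tail. */
    static String abbreviate(String text, int maxLength) {
        if (maxLength < MARKER.length() + 2) {
            throw new IllegalArgumentException("maxLength is too small");
        }
        if (text.length() <= maxLength) {
            return text; // short enough, nothing to do
        }
        int keep = maxLength - MARKER.length();
        int head = (keep + 1) / 2; // the head gets the extra character if keep is odd
        int tail = keep / 2;
        return text.substring(0, head) + MARKER + text.substring(text.length() - tail);
    }
}
```

For example, `abbreviate("abcdefghijklmnopqrstuvwxyz", 11)` yields `abc ... xyz`. A limit well below 5000 characters leaves room for the rest of the message.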

Submission Policy Configuration

Submission policies define the effect of a submission on participant progress. A programming exercise can have zero or one submission policy (never more than one). Policies are specified during exercise creation and can be adjusted in the grading configuration later.

Definition: One submission = one push to the exercise repository by the participant that triggers automatic tests resulting in feedback. Automatic test runs triggered by instructors are not counted as submissions.

Submission Policy Types

Choosing the right policy depends on the exercise and teaching style. Lock repository and submission penalty policies combat trial-and-error solving approaches.

1. None

No submission policy. Participants can submit as often as they want until the due date.

2. Lock Repository

Participants can submit a fixed number of times. After reaching the limit, the repository is locked and further submissions are prevented.

With the example configuration shown above, participants can submit 5 times. After the 5th submission, Artemis locks the repository, preventing further pushes.

If locking fails and the participant submits again, Artemis attempts to lock again and sets the new result to .

The participant may still work on their solution locally, but cannot submit it to Artemis to receive feedback.

3. Submission Penalty

Participants can submit as often as they want. For each submission exceeding the limit, the penalty is deducted from the score.

With the example configuration above:

- First 3 submissions: no penalty

- 4th submission: 1.5 points deducted

- 5th submission: 3 points deducted (1.5 × 2 submissions exceeding limit)

- Score cannot be negative

Example: Student achieves 6 out of 12 points on 4th submission. With 1.5 point penalty, final score is 4.5 out of 12.
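The arithmetic above can be written down as a short Java sketch. It assumes the configuration from the list (limit of 3 submissions, penalty of 1.5 points per exceeding submission); the names `SubmissionPenaltySketch` and `penalizedScore` are hypothetical and this is not the Artemis implementation:

```java
final class SubmissionPenaltySketch {

    /**
     * Score after applying the submission penalty.
     * Each submission beyond the limit costs penaltyPerExceeding points; the score never drops below 0.
     */
    static double penalizedScore(double achievedPoints, int submissionCount,
                                 int submissionLimit, double penaltyPerExceeding) {
        int exceeding = Math.max(0, submissionCount - submissionLimit);
        double deduction = exceeding * penaltyPerExceeding;
        return Math.max(0.0, achievedPoints - deduction);
    }
}
```

With these values, `penalizedScore(6, 4, 3, 1.5)` gives 4.5 and `penalizedScore(6, 5, 3, 1.5)` gives 3.0, matching the example above.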

Students receive feedback explaining the deduction:

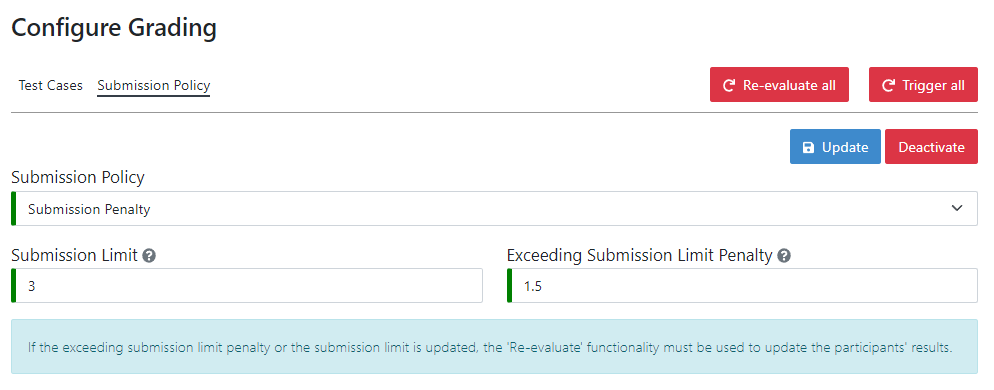

Updating Submission Policies

After generating an exercise, submission policies can be updated on the grading page.

(De)activating Submission Policies

- Active policy: Shows  button

- Inactive policy: Shows  button

When deactivated, Artemis no longer enforces the policy. Locked repositories are unlocked. For submission penalty policies, press  to apply changes.

Updating Submission Policies

Modify the configuration and press . The effect of the former policy is removed and the new policy is applied. For submission penalty policies, press  to update results.

Deleting Submission Policies

Select None as policy type and press . Locked repositories are unlocked. For submission penalty policies, press  to revert effects.

Java DejaGnu: Blackbox Testing

Classical testing frameworks like JUnit allow writing whitebox tests, which enforce assumptions about code structure (class names, method names, signatures). This requires specifying all structural aspects for tests to run on student submissions. That may be okay or even desired for a beginner course.

For advanced courses, this is a downside: students cannot make their own structural decisions and gain experience in this important programming aspect.

DejaGnu enables blackbox tests for command line interfaces. Tests are written in Expect Script (extension of Tcl). Expect is a Unix utility for automatic interaction with programs exposing text terminal interfaces in a robust way.

Test scripts:

- Start the program as a separate process (possibly several times)

- Interact via textual inputs (standard input)

- Read outputs and make assertions (exact or regex matching)

- Decide next inputs based on output, simulating user interaction

For exercises, only specify:

- Command line interface syntax

- Rough output format guidance

The source code structure is left to the students to the extent you want.

The quality of the source code structure can be assessed manually after the submission deadline. The template uses Maven to compile student code, so it can be extended with regular unit tests (e.g., architecture tests for cyclic package dependencies), and the results of both kinds of tests can be reported to the student.

Usage: Consult official documentation and initial test-repository content. DejaGnu files are in the testsuite directory. The ….tests directory contains three example test execution scripts.

Example: PROGRAM_TEST {add x} {} sends "add x" to the program and expects no output.

Helper functions like PROGRAM_TEST are defined in config/default.exp.

Variables in SCREAMING_SNAKE_CASE (e.g., MAIN_CLASS) are replaced with actual values in previous build plan steps. For example, the build plan finds the Java class with main method and replaces MAIN_CLASS.

Best Expect documentation: Exploring Expect book. The Artemis default template contains reusable helper functions in config/default.exp for common I/O use cases.

This exercise type makes it quite easy to reuse existing exercises from the Praktomat autograder system.

Sending Feedback back to Artemis

By default, unit test results are extracted and sent to Artemis automatically. Only custom setups may need semi-automatic approaches.

Jenkins

In Jenkins CI, test case feedback is extracted from XML files in JUnit format. The Jenkins plugin reads files from a results folder in the Jenkins workspace top level. Regular unit test files are copied automatically.

For custom test case feedback, create a customFeedbacks folder at workspace top level. In this folder, create JSON files for each test case feedback:

{

"name": string,

"successful": boolean,

"message": string

}

- name: Test case name as shown on 'Configure Grading' page. Must be non-null and non-empty

- successful: Indicates test case success/failure. Defaults to false if not present

- message: Additional information shown to student. Required for non-successful tests, optional otherwise
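As an illustration of how a custom build step might produce such a file, the following Java sketch builds the JSON by hand and writes it into the customFeedbacks folder. The class name, method names, and the file name parameter are hypothetical; the source only prescribes the folder and the three fields.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

final class CustomFeedbackSketch {

    /** Escapes characters that must not appear raw inside a JSON string. */
    static String escape(String value) {
        StringBuilder sb = new StringBuilder();
        for (char c : value.toCharArray()) {
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    /** Builds one feedback object and enforces the field rules listed above. */
    static String toJson(String name, boolean successful, String message) {
        if (name == null || name.isEmpty()) {
            throw new IllegalArgumentException("name must be non-null and non-empty");
        }
        if (!successful && message == null) {
            throw new IllegalArgumentException("message is required for non-successful tests");
        }
        StringBuilder json = new StringBuilder();
        json.append("{\"name\": \"").append(escape(name)).append("\", \"successful\": ").append(successful);
        if (message != null) {
            json.append(", \"message\": \"").append(escape(message)).append('"');
        }
        return json.append('}').toString();
    }

    /** Writes the JSON into <workspace>/customFeedbacks/<fileName> (file name is a free choice here). */
    static Path write(Path workspace, String fileName, String json) throws IOException {
        Path dir = Files.createDirectories(workspace.resolve("customFeedbacks"));
        return Files.write(dir.resolve(fileName), json.getBytes(StandardCharsets.UTF_8));
    }
}
```

A call such as `write(workspace, "styleCheck.json", toJson("styleCheck", false, "Line too long"))` would then create one feedback file, where `styleCheck` is an invented test case name.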

Integrated Code Lifecycle

The Artemis Integrated Code Lifecycle allows using programming exercises fully integrated within Artemis, without external tools. Find more information in the Integrated Code Lifecycle documentation.